|

Hi Daniel,

Your history syncer spikes cane be caused by housekeeper problems.

Can you attach the output of the following query :

regards,

Kaspars

|

|

Hi Kaspars,

thanks for the fast reply. Here's the output:

+----------+--------+

| count(*) | source |

+----------+--------+

| 183884 | 0 |

| 11835827 | 1 |

| 368808 | 2 |

| 7279489 | 3 |

+----------+--------+

Due to the perfomance issues we decreased the retention times of events yesterday and since that hasn't fixed it, we deleted 17 million events from object=4 and source=3 that were older than 60 days (didn't know there was a fix for the events query ZBX-13275, found it today). delete from events where clock < 1510672714 AND object = 4 AND source = 3 (in chunks of 1000). After that we also did a optimize/recreate. We did the optimize also on all other tables except history*/trends* (too big to do it on weekdays)

Trigger data storage period: 60d

Internal data storage period: 30d

Network discovery data storage period: 14d

Auto-registration data storage period: 2d

Regards,

Daniel

|

|

You can set the Internal data storage period to : 1d (its only Zabbix internal data)

at this moment, which exact Zabbix Server version are you running - 3.4.5 or 3.4.6 ?

Regards,

Kaspars

|

# zabbix_server -V

zabbix_server (Zabbix) 3.4.6

Revision 76823 15 January 2018, compilation time: Jan 15 2018 09:34:47

From the official Repository deb http://repo.zabbix.com/zabbix/3.4/debian jessie main

I'll set the internal data storage period to 1d.

We are tracking the row count of the events table and had over 30 million rows (no per object type statistics available) for a few months now and no issues at all.

|

|

I can see many select distinct itemid from trends where clock>=1516114800 and (itemid [...] queries right now. I don't think I ever saw those before.

Many of those queries have a long runtime of a few minutes (right now I can see up to 6 minutes).

We have 700 mio rows in trends_uint and 300 mio rows in trends, both with 32 "HASH(`itemid` )" partitions. The number of rows hasn't changed much in the last months.

Are those queries "new"?

|

|

You can try to downgrade to previous zabbix version.

|

|

Probably caused by ZBX-11426 and is a duplicate of ZBX-12758. Queries you've mentioned aren't new. I agree with kaspars.mednis that housekeeper is your main problem.

|

|

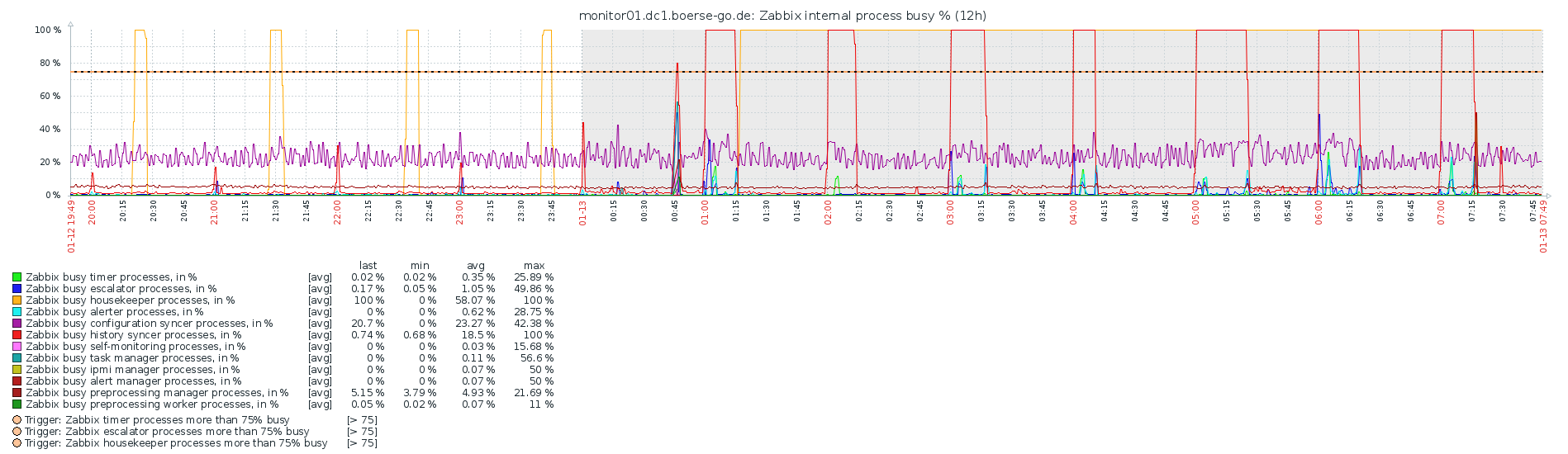

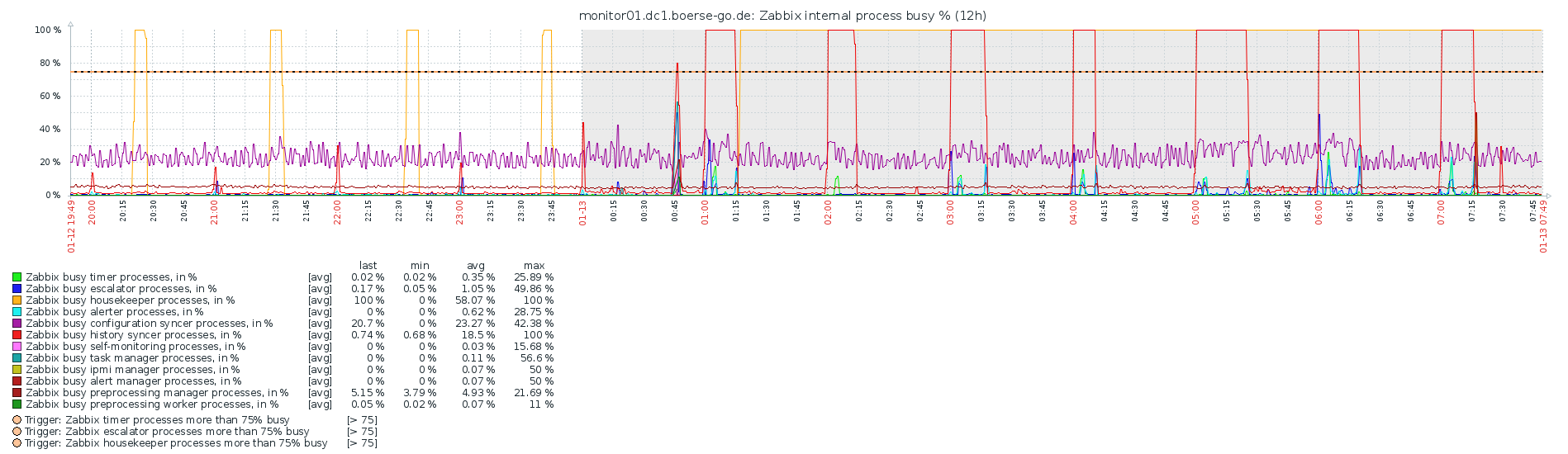

On 1st graph:

it's noticeable that problem started at 01 AM - start of an hour (ding dong - trends calculation, very first, which is harder that next ones) and housekeeper was sleeping that time for 30 minuted after restart.

So, housekeeper is not related here.

|

|

As you said, first trend calculation after restart is harder than subsequent ones, and we don't know how it looked like before upgrade to 3.4.5 (unless reporter provides a picture of restart without upgrade). So I am ignoring first syncer spike. The first anomaly after upgrade is 100% load of housekeeper.

|

|

Ok, point accepted!

Also, very 1st housekeeper activity is also, btw, harder that next ones, because it needs to evaluate existing history/trends for each itemid. Corresponding SQLs are executed only once, and they are heavy.

Also, we see that syncers were sensitive on each hour start (spikes) before upgrade. It's quite rare picture. Usually it's not noticeable at all.

|

|

Thanks for your help. I did a downgrade and will check if the second and later housekeeper and syncer will show the same behavior. If yes, something with the database is wrong and it was some bad luck that it happened at the same time as the upgrade or a small schema change did something.

I'll report back tomorrow.

Btw. the first graph shows the behavior before the upgrade. All the weekdays are statistics of 3.4.3, the weekend is already 3.4.5

|

|

But there are no restarts before upgrade seen in those graphs. We are talking about the first hour after restart before and after the upgrade. We see after picture, before is missing. Perhaps you can still have such data in history, would be nice if you could attach a screenshot.

By the way, disabling a housekeeper in 3.4.5 and checking how it affects (or not) history syncer would be a nice insight as well.

|

|

Hi,

Just to report I'm having exactly same issue here after upgrading from 3.4.5 to 3.4.6.

Attaching the Zabbix chart and reporting the log, after upgrade, is full of this:

7553:20180117:065323.721 [Z3005] query failed: [1205] Lock wait timeout exceeded; try restarting transaction

[...]

7539:20180117:075040.420 slow query: 196.468197 sec, "delete from history_uint where itemid=76327 limit 500000"

[...]

7557:20180117:075509.466 slow query: 7.912767 sec, "select distinct itemid from trends_uint where clock>=1516165200 and itemid in (39512,43320,43324,433

vso slow query is the one that delete history for the item so I don't think it' related to anything, it's just slow operation, to delete 500K history entries in one go.

You can try lower MaxHousekeeperDelete

|

|

Could you please be so kind and mention exact housekeeper configuration you had before and after upgrade ? Does reverting to old configuration solve the issue ?

|

|

@Vladislav,

I hadn't change the housekeeper configuration at all.

Actually it's 365d for all elements, except for history, where it's 15d.

I still haven't revert to version 3.4.5: system is still usable though very slow.

|

|

zabbix_processes_3.4.3_restart.png shows the the restart of 3.4.3 at 8:50 and the expected peaks in the first two full hours (9:00 and 10:00), 11:00 is already calm like it should be (with a small and short peak). shows the the restart of 3.4.3 at 8:50 and the expected peaks in the first two full hours (9:00 and 10:00), 11:00 is already calm like it should be (with a small and short peak).

The whole graph shows 3.4.3 since I downgraded yesterday evening.

Another thing if you compare the peaks, the graphs in the description are with 20 DBSyncers, this one with 100. I thought it could help to get the 10 minutes delay with 3.4.6 down, but nothing changed. Forgot to turn it down before downgrading yesterday.

I'll upgrade again now, disable the housekeeper and send you the graph with 3 hours after the change.

|

|

Thank you, thetuxkeeper! So the first hourly spike of history syncer is as bad in 3.4.5 as it was in 3.4.3 (100% for ~15 minutes) and my assumption was correct. Housekeeper is still our main suspect.

Looking forward to get some updates from you!

|

|

zabbix_processes_3.4.6_restart.png

I did the restart (and upgrade) at 13:43. The peaks of the history syncer don't stop and nodata triggers are complaining during that time. The delay was about 11 minutes, at 17:11 most nodata triggers were ok again. Same at 16:00 peak.

The housekeeper is deactivated/in manual mode "/usr/sbin/zabbix_server: housekeeper [waiting for user command]"

|

|

Update:

Seems that it took the night to stabilize the syncer and housekeeper processes:

Most likely what happened was that during the upgrade I somehow set 500000 as MaxHousekeeperDelete: setting the value back to default, the system is running normal after some cycles of intense housekeeping.

Thanks for your help, appreciated

Giuseppe

|

|

Difference between zabbix_processes_3.4.3_restart.png and zabbix_processes_3.4.6_restart.png and zabbix_processes_3.4.6_restart.png is alarming. I should point out that the issue was encountered after 3.4.3 to 3.4.5 upgrade. Similar pictures attached in is alarming. I should point out that the issue was encountered after 3.4.3 to 3.4.5 upgrade. Similar pictures attached in ZBX-13341 blaming 3.4.4 to 3.4.6 upgrade. So 3.4.3 and 3.4.4 are OK and 3.4.5 and 3.4.6 are not.

Have we done something in 3.4.5 that could have increased MySQL/MariaDB memory consumption? Trend synchronization is very sensitive to it.

vso I know it's a long shot but ZBXNEXT-4002 aka Elasticsearch was implemented in 3.4.5

glebs.ivanovskis I couldn't find anything more related in ChangeLog. Seems plausible.

|

|

We increased the innodb_buffer_pool_size from 8G to 12G (on 16.1.) and the RAM from 13G to 18G of the dedicated database vm (on 14.1.). But since that hasn't changed anything at all, I forgot to mention it. So both zabbix_processes_3.4.6_restart.png and zabbix_processes_3.4.3_restart.png and zabbix_processes_3.4.3_restart.png were with the increased innodb_buffer_pool_size (the other graphs are with 8G innodb buffer pool). were with the increased innodb_buffer_pool_size (the other graphs are with 8G innodb buffer pool).

|

|

This is an important comment! Thank you, thetuxkeeper!

Looks more and more like some important piece of trend sync logic was lost with ZBXNEXT-4002 and now every trend sync is as heavy as the first one.

|

|

Fixed in development branch svn://svn.zabbix.com/branches/dev/ZBX-13343

|

|

Successfully tested

|

|

Released in:

Note that only 3.4 branch was affected by this bug.

|

|

some communication seems to be lost - what was the issue in the end ?

wiper Regression.

|

|

Is there anything we can to to mitigate this effect without moving to an RC/alpha? We are currently scheduling our upgrade from 3.2 to 3.4.5. In 3.2 we already have delayed values, and one of the basis for our upgrade to 3.4.5 is improved config syncer/housekeeper behaviour. Thanks

|

|

I'm afraid the only thing would be backporting the patch (it was quite simple fix).

|

|

It's impossible to use 3.4.5, 3.4.6 because of this - in my case, all processing stuck and nothing works (3k nvps). In my opinion you should release 3.4.7 bugfix release even if it only will contain this patch and nothing else - there should not be such bug in latest published version of the product.

|

|

Paskal, 3.4.7rc1 will be released on this week. As workaround you can temporary disable housekeeping of the events.

Sorry, this is another problem. This workaround will not help. Today will release 3.4.7rc1.

|

|

Released in https://sourceforge.net/projects/zabbix/files/ZABBIX%20Release%20Candidates/3.4.7rc1/

|

|

+1. Google send me here. I don't suppose someone has a 3.4.7rc1 mysql binary somewhere?

Edit. I made some.

https://www.dropbox.com/sh/w7bdnndcqauzvg8/AABC94a90q-AzLHvg3YIK1Sqa?dl=0

|

|

3.4.7 must be available.

|

Generated at Tue Jun 17 07:03:30 EEST 2025 using Jira 9.12.4#9120004-sha1:625303b708afdb767e17cb2838290c41888e9ff0.