|

Hello, Robin,

Could you please elaborate on how the host group name was generated from the macro? Are you using a single network discovery rule to implement this scenario? Please add a screenshot of the network discovery configuration.

Regards,

|

|

Sorry for the late reply; I was on vacation.

It seems that I did not make myself clear. It is not a network discovery scenario but a host prototype defined in an LLD rule (in a template assigned to a host).

Specifically, in my case I monitor an Oracle VM Manager (similar to vCenter in VMware vSphere) to discover all OVS servers (similar to ESXi servers in VMware) running within that environment. Those OVS servers are then added to Zabbix using a host prototype in the template assigned to the OVM Manager. This is similar to how VMware ESXi servers are discovered, but with external scripts handling the communication with the OVM API.

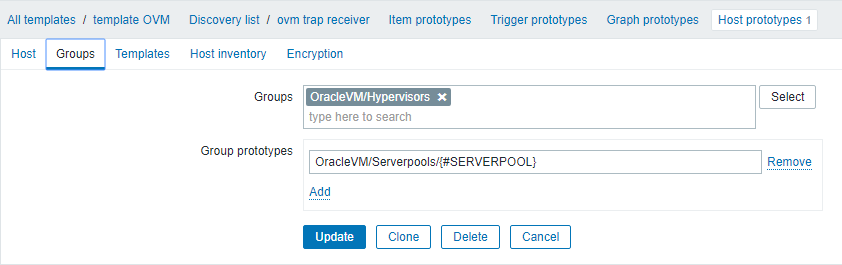

In this host prototype I defined a group prototype containing the name of the server pool (similar to vSphere clusters) that the discovered OVS is in.

The problem encountered is that an OVS was moved to another Server Pool, and before discovery could pick that up, the OVS host in Zabbix was manually moved to the new Server Pool-group created by a group prototype of another OVS-host that was already in that Server Pool.

Then discovery kicked in, noticed the host's change of server pool, and Zabbix tried to move the discovered host to the correct group. However, the host was already in that group due to the manual move, and discovery failed to notice that, resulting in the error described above.

I hope this clarifies the problem.

|

|

Hello, Robin!

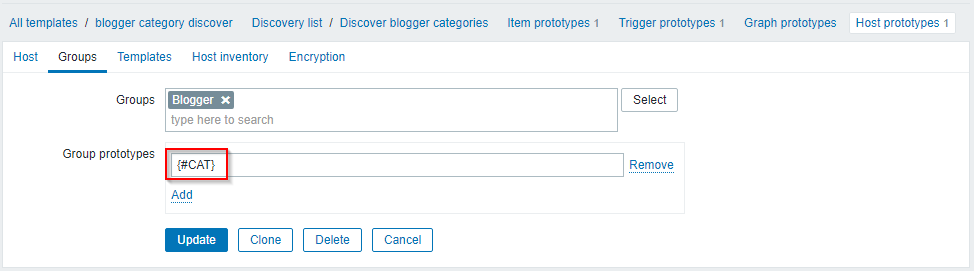

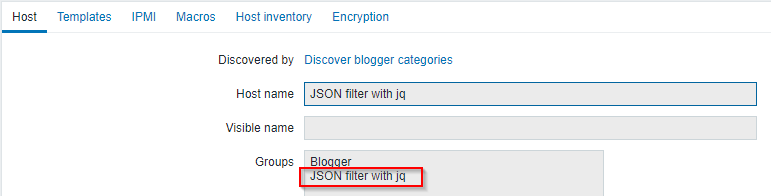

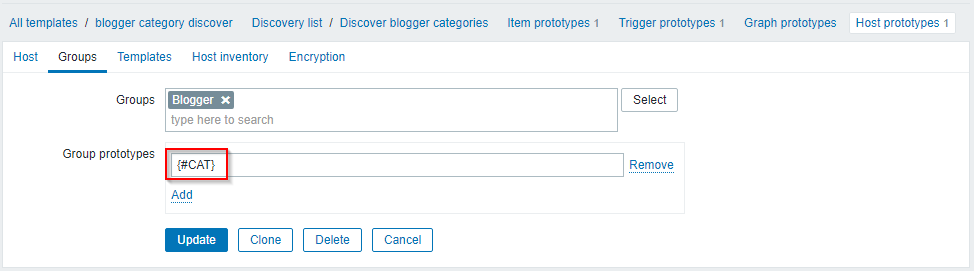

I've prepared nearly the same scenario: a host prototype with a group prototype based on {#CAT}, which comes from LLD. Just to be on the same page, I want to clarify that we are talking about this field:

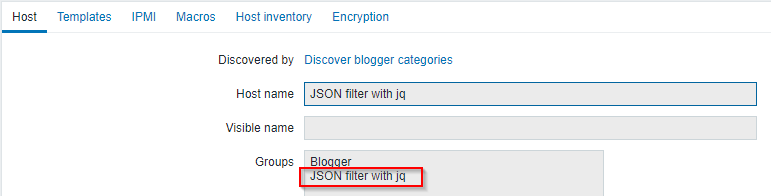

After that, hosts created from the host prototype are strictly tied to the host group.

I'm wondering how you were able to move a host (created from a host prototype) to a different group. Did you use any tools other than the web UI?

Regards,

Aigars

|

|

Hello Aigars,

We are on the same page, that is exactly what I do.

However, your question leaves me puzzled, as indeed I now can't change the group of a discovered host. And I definitely didn't use anything other than the web UI.

I also absolutely don't remember doing anything special to manually move that host. I know for sure that it was initially discovered in another group, and when the error started showing up in the Zabbix log, the host was already in the new group that discovery tried to move it to, hence discovery stopped.

Was locking the groups of discovered hosts a recent change in Zabbix? (It's possible that, at the time I moved the host, I was running a version one or two minor releases older than 3.4.11.)

|

|

Hi, Robin,

Groups for host prototypes have been strictly attached for as long as I remember Zabbix. A more likely explanation is that the host was cloned using the [Clone] or [Full clone] button. That is not a recommended thing to do with hosts created from a host prototype; someone who does it usually knows what they are trying to accomplish and disables the LLD rule or unlinks the template afterwards. For now, I would strongly suggest finding those detached hosts and removing them.

Regards,

|

|

OK, now I could really reproduce it.

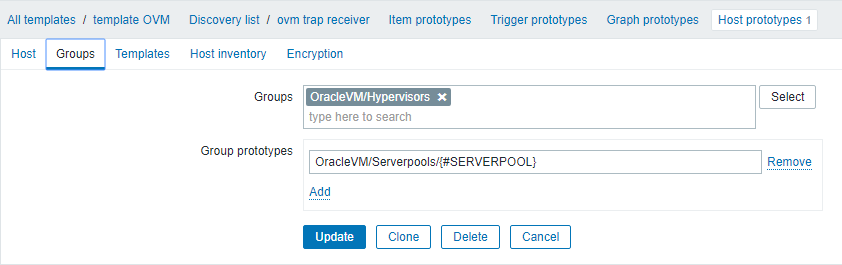

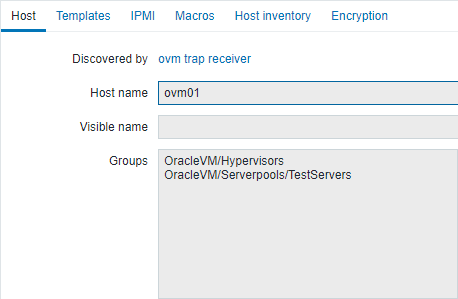

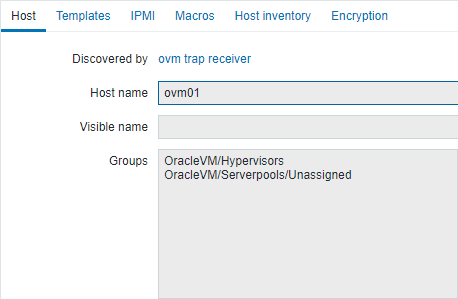

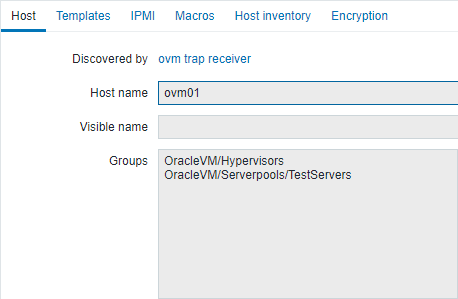

In my case, I have an OVM server 'ovm01' in server pool 'TestServers'.

LLD puts this server in the groups:

- OracleVM/Hypervisors

- OracleVM/Serverpools/TestServers (where 'TestServers' is the value of the LLD macro {#SERVERPOOL})

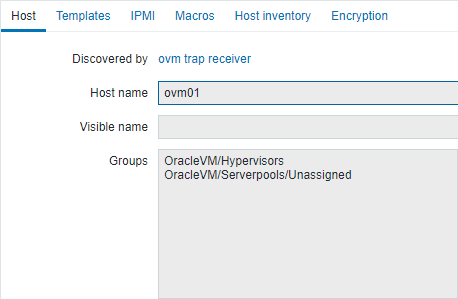

I move OVM server 'ovm01' to Unassigned -> LLD moves the host to the groups:

- OracleVM/Hypervisors

- OracleVM/Serverpools/Unassigned (where 'Unassigned' is the value of the LLD macro {#SERVERPOOL})

I now move OVM server 'ovm01' back to serverpool 'TestServers' -> LLD stops working and fails with "Duplicate entry for key 'hosts_groups_1'"

So this is definitely a bug in Zabbix.

|

|

Again, this is the configuration:

So I executed one discovery cycle to generate the host group "OracleVM/Serverpools/TestServers":

After the second cycle the host went to another group:

And I can move the host back to the first group, but there is no LLD error. I am trying this on 3.4.14; please try to update. Is the OVM host monitored directly by the Zabbix server or through a proxy? How many macros does the LLD rule return? What "Keep lost resources period" do you have in the discovery rule? Would you mind attaching the template you are using?

Regards,

|

|

I upgraded Zabbix to 3.4.14 and retried. However, the same problem remains. I move the host to a new pool, and Zabbix LLD moves the host to the corresponding new group. Then moving the host back results in the duplicate entry error.

The OVM host is indeed monitored through a proxy.

Keep lost resources period is set to 30d; however, that should not be related to this problem, as the host does not disappear but only changes, right?

These are the macros I get from LLD:

```
"{#OVMM_SERVER_ID}":"4c.4c.45.44.00.4b.36.10.80.58.c2.c0.4f.43.35.4a",
"{#OVMM_SERVER_NAME}":"ovm01",
"{#OVMM_SERVER_IP}":"XXX.XXX.XXX.XXX",
"{#OVMM_SERVER_HOSTNAME}":"ovm01.domain.be",
"{#OVMM_SERVER_SERVERPOOL_ID}":"0004fb0000020000a84fc28506e67b9f",
"{#OVMM_SERVER_SERVERPOOL_NAME}":"TestPool",
"{#OVMM_SERVER_LOCAL_FILESERVER_ID}":"0004fb00000900001a95d04b1b89d455",
"{#OVMM_SERVER_LOCAL_FILESERVER_NAME}":"Local FS ovm01.domain.be"
```

The template calls item key ovmm.discovery["Server"] which is a UserParameter defined on the Agent of the OVM Manager.

Please find the template for the OVM Manager, containing the Server discovery rule 'OVM Server Discovery', attached.

|

|

Hi,

Thanks for the macros. It seems that between the two LLD cycles only two fields change: {#OVMM_SERVER_SERVERPOOL_NAME} and {#OVMM_SERVER_SERVERPOOL_ID}. Please confirm this by attaching the second part of the scenario (the "Unassigned" case).

Currently I cannot use the template; please attach "Template VM Oracle VM Shared Repository" as well.

Is it an active proxy in your case? What is the "ConfigFrequency=" value? What is the proxy version?

Regards,

|

|

After the change to Unassigned:

```
"{#OVMM_SERVER_ID}":"4c.4c.45.44.00.4b.36.10.80.58.c2.c0.4f.43.35.4a",
"{#OVMM_SERVER_NAME}":"ovm01",
"{#OVMM_SERVER_IP}":"XXX.XXX.XXX.XXX",
"{#OVMM_SERVER_HOSTNAME}":"ovm01.domain.be",
"{#OVMM_SERVER_SERVERPOOL_ID}":"Unassigned",
"{#OVMM_SERVER_SERVERPOOL_NAME}":"Unassigned",
"{#OVMM_SERVER_LOCAL_FILESERVER_ID}":"0004fb00000900001a95d04b1b89d455",
"{#OVMM_SERVER_LOCAL_FILESERVER_NAME}":"Local FS ovm01.domain.be"
```

The proxy is indeed configured as an Active proxy. At time of the bug report, it was version 3.4.10. Currently it is 3.4.12 (latest available for SUSE: https://build.opensuse.org/package/show/server:monitoring:zabbix/zabbix34)

ConfigFrequency is set to default (I assume 3600)

I'll attach "Template VM Oracle VM Server" and "Template VM Oracle VM Shared Repository" as well.

|

|

Thank you, Robin! Now the template works. I was able to simulate your case very closely by converting the LLD item to "Zabbix trapper":

```
# one discovery
zabbix_sender -z 127.0.0.1 -s ovm -k 'ovmm.discovery["Server"]' -o '{"data":[{"{#OVMM_SERVER_ID}":"4c.4c.45.44.00.4b.36.10.80.58.c2.c0.4f.43.35.4a", "{#OVMM_SERVER_NAME}":"ovm01", "{#OVMM_SERVER_IP}":"XXX.XXX.XXX.XXX", "{#OVMM_SERVER_HOSTNAME}":"ovm01.domain.be", "{#OVMM_SERVER_SERVERPOOL_ID}":"0004fb0000020000a84fc28506e67b9f", "{#OVMM_SERVER_SERVERPOOL_NAME}":"TestPool", "{#OVMM_SERVER_LOCAL_FILESERVER_ID}":"0004fb00000900001a95d04b1b89d455", "{#OVMM_SERVER_LOCAL_FILESERVER_NAME}":"Local FS ovm01.domain.be"}]}'

# next discovery
zabbix_sender -z 127.0.0.1 -s ovm -k 'ovmm.discovery["Server"]' -o '{"data":[{"{#OVMM_SERVER_ID}":"4c.4c.45.44.00.4b.36.10.80.58.c2.c0.4f.43.35.4a", "{#OVMM_SERVER_NAME}":"ovm01", "{#OVMM_SERVER_IP}":"XXX.XXX.XXX.XXX", "{#OVMM_SERVER_HOSTNAME}":"ovm01.domain.be", "{#OVMM_SERVER_SERVERPOOL_ID}":"Unassigned", "{#OVMM_SERVER_SERVERPOOL_NAME}":"Unassigned", "{#OVMM_SERVER_LOCAL_FILESERVER_ID}":"0004fb00000900001a95d04b1b89d455", "{#OVMM_SERVER_LOCAL_FILESERVER_NAME}":"Local FS ovm01.domain.be"}]}'
```

I ran these two commands in turn, multiple times, through an active proxy, and there were no LLD errors.

Apparently, there is a big incompatibility issue with version 3.4.10. Please try to reproduce the scenario once you migrate away from 3.4.10.

Regards,

|

|

Yes, as mentioned in my last comment, I have now upgraded the 3.4.10 proxy to v3.4.12; however, the problem is unchanged.

1st discovery of the host -> TestPool: works

TestPool -> Unassigned: works

Unassigned -> TestPool: LLD stops working:

```
[Z3005] query failed: [1062] Duplicate entry '10514-37' for key 'hosts_groups_1' [insert into hosts_groups (hostgroupid,hostid,groupid) values (766,10514,37),(767,10514,25);
```

For reference:

- hostid = 10514 -> ovm01

- groupid = 37 -> Oracle VM/Hypervisors

- groupid = 25 -> Oracle VM/ServerPools/TestPool

So it seems to me that the problem here is not the group using the macro, but the static group, which doesn't change?

Currently, what I do to correct the problem is manually delete the LLD discovered host which allows LLD to rediscover and create the host again.

|

|

Hello Robin,

Debugging this issue is harder than I thought. I have to ask you to try out the template I designed, to replicate the problem on your side.

Please:

1) Import test-Template-VM-Oracle-VM.xml. This will create the templates:

test Template VM Oracle VM Manager

test Template VM Oracle VM Server

test Template VM Oracle VM Shared Repository

2) Create a dummy host (with IP 0.0.0.0 or similar) named testovm and assign "test Template VM Oracle VM Manager" to it. Set the host to be monitored by the proxy.

3) Wait 1 minute for the configuration to flush down to the database.

4) Reload the configuration on the proxy:

```
zabbix_proxy -R config_cache_reload
```

5) Send the first LLD cycle to the host:

```
# yum install zabbix-sender
# apt-get install zabbix-sender
zabbix_sender -z 127.0.0.1 -s testovm -k 'ovmm.discovery["Server"]' -o '{"data":[{"{#OVMM_SERVER_ID}":"4c.4c.45.44.00.4b.36.10.80.58.c2.c0.4f.43.35.4a", "{#OVMM_SERVER_NAME}":"ovm01", "{#OVMM_SERVER_IP}":"XXX.XXX.XXX.XXX", "{#OVMM_SERVER_HOSTNAME}":"ovm01.domain.be", "{#OVMM_SERVER_SERVERPOOL_ID}":"0004fb0000020000a84fc28506e67b9f", "{#OVMM_SERVER_SERVERPOOL_NAME}":"TestPool", "{#OVMM_SERVER_LOCAL_FILESERVER_ID}":"0004fb00000900001a95d04b1b89d455", "{#OVMM_SERVER_LOCAL_FILESERVER_NAME}":"Local FS ovm01.domain.be"}]}'
```

6) Make sure you receive "processed: 1; failed: 0;" in the output.

7) Observe that the host ovm01 appears in the frontend.

8) Run the next discovery:

```
zabbix_sender -z 127.0.0.1 -s testovm -k 'ovmm.discovery["Server"]' -o '{"data":[{"{#OVMM_SERVER_ID}":"4c.4c.45.44.00.4b.36.10.80.58.c2.c0.4f.43.35.4a", "{#OVMM_SERVER_NAME}":"ovm01", "{#OVMM_SERVER_IP}":"XXX.XXX.XXX.XXX", "{#OVMM_SERVER_HOSTNAME}":"ovm01.domain.be", "{#OVMM_SERVER_SERVERPOOL_ID}":"Unassigned", "{#OVMM_SERVER_SERVERPOOL_NAME}":"Unassigned", "{#OVMM_SERVER_LOCAL_FILESERVER_ID}":"0004fb00000900001a95d04b1b89d455", "{#OVMM_SERVER_LOCAL_FILESERVER_NAME}":"Local FS ovm01.domain.be"}]}'
```

9) Observe that the host group changes.

10) Run the first discovery again.

11) Confirm that you receive the LLD error.

P.S. It is possible that hosts from your real OVM host overlap with our test host. In that case, either the "Unlink template and clear" procedure must be executed on the original OVM host, or we must rename the {#OVMM_SERVER_ID} and {#OVMM_SERVER_NAME} values in our discovery.

Regards,

|

|

Hello Aigars

I executed your instructions.

I indeed had to rename the {#OVMM_SERVER_ID} and {#OVMM_SERVER_NAME} values, but also {#OVMM_SERVER_SERVERPOOL_ID} and {#OVMM_SERVER_SERVERPOOL_NAME}. When I first tried with the original serverpool_id/_name, the host was simply not added to that group. (I assume this is because the original groups are still managed by the original LLD rule? I didn't know I can't use the same dynamic group name in two or more separate discovery rules. I can, however, manually add other hosts to such an LLD-created group.)

Anyway, with all four of those macros changed, I discovered 'ovm01test' using zabbix_sender, first in TestPooltest, then in Unassignedtest, then back in TestPooltest as you instructed. Indeed, this gives no errors and just works as expected, no matter how many times I repeat it.

When I do this with my original template and hosts, I consistently get the error when moving from TestPool to Unassigned and then back to TestPool. The error then keeps repeating on every LLD run until I manually delete that discovered host, so that it gets rediscovered, back in TestPool.

I don't really see why the test setup can't reproduce the problem I see with the original setup.

I even tried changing your template to call a Zabbix agent UserParameter "ovm.test.discovery[*]", which calls a small bash script that alternately returns the first and the second discovery data string each time it is executed. I then assigned the IP address of the real OVMM server to testovm. So the testovm host now contacts the same Zabbix agent, through the same proxy, and receives a discovery string that alternates the server pool between TestPooltest and Unassignedtest on each check. I now see the ovm01test machine change groups every 30 s without problems.

I have no clue why this works while the original setup still fails after alternating the server pool. The only difference I currently see is that there are more discovered hosts in the original setup. So I manually added testovm to TestPooltest as well, but this setup still keeps working.

Any idea what to check next?

|

|

Hello Robin,

Thanks for the investigation.

Host prototype groups with the same name from different OVM platforms are not allowed.

1) It is worth trying to switch the LLD rule from "Zabbix agent" to "Zabbix agent (active)" to speed up LLD delivery a bit. In this case, make sure the host name of the OVM server matches the "Hostname=" in zabbix_agentd.conf on the OVM server 1:1.

2) We can capture the actual LLD sample and replay it on the test setup. Please log in to the proxy and run:

```
# apt-get install zabbix-get
# yum install zabbix-get
zabbix_get -s ip.on.ovm.host -p 10050 -k 'ovmm.discovery["Server"]'

# move the host to the second group

# wondering what the execution time of LLD is
time zabbix_get -s ip.on.ovm.host -p 10050 -k 'ovmm.discovery["Server"]'
```

You can either attach the LLD cycles as an attachment or bring up another Zabbix server (https://www.zabbix.com/download_appliance) and test it without a proxy. Username: appliance, password: zabbix.

3) What database type are you running on the proxy?

Regards,

|

|

Hello Aigars

1) True, but I assume that won't change anything about this problem?

2) I performed a cycle 'TestPool' -> 'Unassigned' -> 'TestPool' and logged the LLD output in the attached file. The execution time fluctuates between 400 ms and 1,100 ms, with an average of around 600 ms.

3) The proxy is using a SQLite db

|

|

Hello Robin,

It is nice to be able to try reproducing the problem with the same data. Thanks!

You have a very tidy environment there. I tested all those cycles via Zabbix trapper, also with SQLite3 on the proxy. I did not receive an error on the third (move back) cycle.

The LLD item type "Zabbix agent (active)" works in a similar manner to a Zabbix trapper item, so please switch your LLD item to "Zabbix agent (active)".

What is the NVPS (new values per second) of the proxy? This can be seen under Administration -> Proxies.

Are there any suspicious warnings in zabbix_proxy.log? Would you mind attaching it if the active agent does not help?

Regards,

|

|

Hello robinr

Did you try to switch LLD to the Item type "Zabbix agent (active)"? What were the results?

|

|

Hi Aigars

I have switched the LLD rule to type Zabbix Agent (Active) then again performed the OVM migration back and forth. Unfortunately the error again started showing up in the zabbix.log after the migration back to the original server pool.

The NVPS for the proxy is 8.54.

There are no relevant messages whatsoever in the Zabbix proxy log.

|

|

Hello Robin,

I'm close to closing this case as unable to reproduce, but...

If you really want to invest the time, you must debug the "trapper" process inside the Zabbix server. What you need to do is:

- Set the LLD interval to 30 seconds and wait one minute.

- Reload the config cache for zabbix_proxy.

- Open an SSH session to the Zabbix server.

- Empty the log file:

```
> /var/log/zabbix/zabbix_server.log
```

- Increase the trapper verbosity to level 4:

```
zabbix_server -R log_level_increase="trapper"
```

- Run your scenario. Make sure you receive an error in the frontend.

- Stop the debugging:

```
zabbix_server -R log_level_decrease="trapper"
```

- Copy the log file somewhere else so it does not get rotated:

```
cp /var/log/zabbix/zabbix_server.log ~
```

Now look inside the log file and search for the line that contains:

```
trapper got '{"request":"proxy data","host":"YourProxyNameHere",...
```

Find the "trapper got" line that contains your LLD rule (search by the LLD macro name).

It will contain a lot of information (some values received from hosts, plus LLD rules). After this line, there will be a long slow-motion view of how the values get stored and how the LLD gets preprocessed. Look at the next 1000 lines. There will be a line containing:

and after this line there will be four lines per item, similar to:

```
10736:20181012:144211.950 In substitute_key_macros() data:'log.key[{#LOGFILEPATH}]'
10736:20181012:144211.950 In substitute_lld_macros() data:'{#LOGFILEPATH}'
10736:20181012:144211.950 End of substitute_lld_macros():SUCCEED data:'/var/log/daemon.log'
10736:20181012:144211.950 End of substitute_key_macros():SUCCEED data:'log.key[/var/log/daemon.log]'
```

The error must be there! If the error does not provide any useful information, repeat the steps at debug level 5 by running the following command twice:

```
zabbix_server -R log_level_increase="trapper"
```

P.S. Be careful with LLD rule containing a 30s interval. Remember to set it back to the normal period after the test is over.

Regards,

|

|

Hi Aigars

I redid the sequence with debug level 4 and then again with level 5, but at both levels I failed to find anything in the logging that could be a clue to the problem.

I see the discovery, I see the correct macro substitutions happening, I see the host changing host group (the first time), and the second time I see it fail during the host group change due to the duplicate key, because it tries to add the host to the static group in addition to the macro-resolved group.

In the first discovery (TestPool -> Unassigned), Zabbix only adds the host to host group 70 (Unassigned) and removes it from group TestPool (assuming that hostgroupid 937 refers to this association). The host is already in the static group "Oracle VM/Hypervisors" (groupid 37), and this does not change:

```
insert into hosts_groups (hostgroupid,hostid,groupid) values (1019,10577,70);
delete from hosts_groups where hostgroupid=937
```

In the second discovery (Unassigned -> TestPool), Zabbix adds the host to host group 25 (TestPool) but now suddenly also wants to add it to group 37 (Oracle VM/Hypervisors), which the host is already in. Hence the duplicate key:

```
insert into hosts_groups (hostgroupid,hostid,groupid) values (1024,10669,37),(1025,10669,25);
[Z3005] query failed: [1062] Duplicate entry '10669-37' for key 'hosts_groups_1'
```

I see no reason why zabbix would try to add the host to this group 37 as the host is already in it, and has never gone out of it...

I'll attach the parts of the log file that I think are relevant (zabbix_server.logparts). Maybe you see something in it that may lead us somewhere?

|

|

Hello Robin,

Thank you very much for investigation.

I'm close to passing this to the dev team, but to provide more value to the community I have to ask you a huge favour. Since 4.0 is out and 3.4 is out of support, please update your server and proxy to 4.0. Here are some notes on how to update:

```
# create a backup of the database
# double-check that the backup is OK and stored in a safe place
# make sure you have free disk space

# remove the old repository
yum remove zabbix-release

# install the new repository
rpm -ivh http:

# clear the yum cache
yum clean all

# in the MySQL case use
yum update zabbix-web zabbix-web-mysql zabbix-server-mysql

# make sure the Zabbix server is running
systemctl status zabbix-server

# if the server is not running, check SELinux

# make sure there are no errors in the log
tail -500 /var/log/zabbix/zabbix_server.log

# Remember to clear the web browser cache!
```

Please also pay extra attention to how to roll back to the backup. Maybe the fastest and safest option is a cold database backup.

Regards,

|

|

Hello Aigars

Meanwhile I updated the server, proxy and agent to v4.0 and repeated the sequence. However, the problem remains exactly the same:

```
13898:20181016:151941.789 [Z3005] query failed: [1062] Duplicate entry '10688-37' for key 'hosts_groups_1' [insert into hosts_groups (hostgroupid,hostid,groupid) values (1078,10688,37),(1079,10688,25)
```

|

|

I am sorry, but we are having trouble reproducing this issue. Is it possible to reproduce it from a clean installation with a few simple steps and a trapper item?

Could you try out this patch?

```diff
Index: src/libs/zbxdbhigh/lld_host.c
===================================================================
--- src/libs/zbxdbhigh/lld_host.c (revision 86436)
+++ src/libs/zbxdbhigh/lld_host.c (working copy)
@@ -773,6 +773,8 @@
         host = (zbx_lld_host_t *)hosts->values[i];
+        zbx_vector_uint64_sort(&host->new_groupids, ZBX_DEFAULT_UINT64_COMPARE_FUNC);
+
         if (FAIL == (i = zbx_vector_uint64_bsearch(&host->new_groupids, groupid,
                 ZBX_DEFAULT_UINT64_COMPARE_FUNC)))
         {
```

|

|

I can reproduce the error with some small code changes.

Patch to reproduce:

```diff
Index: src/libs/zbxdbhigh/lld_host.c
===================================================================
--- src/libs/zbxdbhigh/lld_host.c (revision 86436)
+++ src/libs/zbxdbhigh/lld_host.c (working copy)
@@ -724,7 +724,10 @@
         zbx_vector_uint64_reserve(&host->new_groupids, groupids->values_num);
         for (j = 0; j < groupids->values_num; j++)
+        {
+            zabbix_log(LOG_LEVEL_INFORMATION, "groupids->values[j]:" ZBX_FS_UI64, groupids->values[j]);
             zbx_vector_uint64_append(&host->new_groupids, groupids->values[j]);
+        }
         if (0 != host->hostid)
             zbx_vector_uint64_append(&hostids, host->hostid);
@@ -740,7 +743,7 @@
         for (j = 0; j < group->hosts.values_num; j++)
         {
             host = (zbx_lld_host_t *)group->hosts.values[j];
-
+            zabbix_log(LOG_LEVEL_INFORMATION, "group->groupid:" ZBX_FS_UI64, group->groupid);
             zbx_vector_uint64_append(&host->new_groupids, group->groupid);
         }
     }
@@ -756,7 +759,7 @@
             " where");
     DBadd_condition_alloc(&sql, &sql_alloc, &sql_offset, "hostid", hostids.values, hostids.values_num);
-    result = DBselect("%s", sql);
+    result = DBselect("%s order by groupid desc", sql);
     zbx_free(sql);
@@ -773,6 +776,13 @@
         host = (zbx_lld_host_t *)hosts->values[i];
+
+        zabbix_log(LOG_LEVEL_INFORMATION, "host hostid" ZBX_FS_UI64, hostid);
+        for (int g = 0; g < host->new_groupids.values_num; g++)
+        {
+            zabbix_log(LOG_LEVEL_INFORMATION, "tic:" ZBX_FS_UI64, host->new_groupids.values[g]);
+        }
+
         if (FAIL == (i = zbx_vector_uint64_bsearch(&host->new_groupids, groupid,
                 ZBX_DEFAULT_UINT64_COMPARE_FUNC)))
         {
```

Steps:

1. Create the host "Zabbix server".

2. Create host group 5.

3. Create a host prototype {#INDEX} with group 5 and group prototype {#INDEX}.

4. Start discovery:

```
./bin/zabbix_sender -v -z 127.0.0.1 -p 10051 -s "Zabbix server" -k "trap1" -o '{"data":[{"{#INDEX}":"test", "{#VALUE}":"0"}]}'
```

5. Now create host group 7, replace group 5 with 7 in the host prototype, then start discovery with zabbix_sender again:

```
10566:20181113:133337.477 groupids->values[j]:18
10566:20181113:133337.481 group->groupid:17
10566:20181113:133337.489 host hostid10265
10566:20181113:133337.490 tic:18
10566:20181113:133337.491 tic:17
10566:20181113:133337.494 host hostid10265
10566:20181113:133337.494 tic:18
10566:20181113:133337.495 tic:17
10566:20181113:133337.586 [Z3005] query failed: [1062] Duplicate entry '10265-18' for key 'hosts_groups_1' [insert into hosts_groups (hostgroupid,hostid,groupid) values (293,10265,18);
```

Note that the binary search is performed on a vector that is not sorted.

|

|

Fixed in development branch:

svn://svn.zabbix.com/branches/dev/ZBX-14635

|

|

Fixed in:

- pre-3.0.24rc1 r86957

- pre-4.0.2rc1 r86950,r86960

- pre-4.2.0alpha1 r86961

|

Generated at Fri Dec 19 22:09:45 EET 2025 using Jira 10.3.13#10030013-sha1:56dd970ae30ebfeda3a697d25be1f6388b68a422.