- Type: Problem report

- Resolution: Fixed

- Priority: Major

- Affects Version/s: 5.0.17

- Component/s: Proxy (P)

- None

- Sprint 82 (Nov 2021), Sprint 83 (Dec 2021), Sprint 84 (Jan 2022)

- 0.5

This is happening to one of our customers (linked).

A Zabbix passive proxy v5.0.17 enters a continuous restart loop due to high memory usage. The same happened with proxy version 4.4.10. Proxy/Server DB: PostgreSQL.

Log entries:

1046220:20211117:103010.650 Got signal [signal:15(SIGTERM),sender_pid:632823,sender_uid:0,reason:0]. Exiting ...

632909:20211117:103029.641 Starting Zabbix Proxy (passive) []. Zabbix 5.0.17 (revision 0a4ac3dabc).

632909:20211117:103029.641 **** Enabled features ****

632909:20211117:103029.641 SNMP monitoring: YES

632909:20211117:103029.641 IPMI monitoring: YES

632909:20211117:103029.641 Web monitoring: YES

632909:20211117:103029.641 VMware monitoring: YES

632909:20211117:103029.641 ODBC: YES

632909:20211117:103029.641 SSH support: YES

632909:20211117:103029.641 IPv6 support: YES

632909:20211117:103029.641 TLS support: YES

632909:20211117:103029.641 **************************

632909:20211117:103029.641 using configuration file: /etc/zabbix/zabbix_proxy.conf

632909:20211117:103029.719 current database version (mandatory/optional): 05000000/05000004

632909:20211117:103029.719 required mandatory version: 05000000

632909:20211117:103029.761 proxy #0 started [main process]

632914:20211117:103029.762 proxy #1 started [trapper #1]

632922:20211117:103039.177 proxy #2 started [trapper #2]

632923:20211117:103039.177 proxy #3 started [trapper #3]

632924:20211117:103039.177 proxy #4 started [trapper #4]

632928:20211117:103039.180 proxy #8 started [trapper #8]

632929:20211117:103039.180 proxy #9 started [trapper #9]
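To see how often the proxy restarts, the log can be scanned for startup entries. The following is a minimal sketch, assuming every entry follows the `PID:YYYYMMDD:HHMMSS.mmm message` layout seen in the excerpt above; the function names `parse_line` and `count_restarts` are made up for illustration and are not part of Zabbix.

```python
import re
from datetime import datetime

# Layout assumed from the log excerpt: PID:YYYYMMDD:HHMMSS.mmm message
LOG_LINE = re.compile(r"^(\d+):(\d{8}):(\d{6})\.(\d{3}) (.*)$")


def parse_line(line):
    """Return (pid, timestamp, message), or None if the line does not match."""
    match = LOG_LINE.match(line.strip())
    if match is None:
        return None
    pid, date, time, millis, message = match.groups()
    timestamp = datetime.strptime(date + time, "%Y%m%d%H%M%S")
    timestamp = timestamp.replace(microsecond=int(millis) * 1000)
    return int(pid), timestamp, message


def count_restarts(lines):
    """Count entries whose message begins with 'Starting Zabbix Proxy'."""
    restarts = 0
    for line in lines:
        parsed = parse_line(line)
        if parsed is not None and parsed[2].startswith("Starting Zabbix Proxy"):
            restarts += 1
    return restarts
```

Feeding it the lines of the proxy log file gives the number of startups in that file; comparing the timestamps of consecutive startup entries shows the restart interval.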

Most likely proxy trapper processes are to blame.

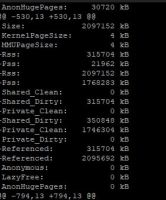

4.4.10 proxy smaps result:

pollers:

1 Pss: 2421 kB

3 Pss: 2422 kB

9 Pss: 2459 kB

7 Pss: 2461 kB

1 Pss: 2462 kB

1 Pss: 2548 kB

1 Pss: 3332 kB

2 Pss: 3376 kB

1 Pss: 3432 kB

1 Pss: 3444 kB

52 Pss: 3448 kB

trappers:

Pss: 2246 kB

Pss: 10787 kB

Pss: 10794 kB

Pss: 10827 kB

Pss: 10844 kB

Pss: 10846 kB

Pss: 10849 kB

Pss: 10849 kB

Pss: 10926 kB

Pss: 11096 kB

Pss: 11451 kB

Pss: 11516 kB

Pss: 11532 kB

Pss: 11928 kB

Pss: 20381 kB

Pss: 29688 kB

Pss: 848416 kB

Pss: 852292 kB

Pss: 880252 kB

Pss: 886256 kB

Pss: 908256 kB

Pss: 978568 kB

Pss: 1000952 kB

Pss: 1021984 kB

Pss: 1024236 kB

Pss: 1025476 kB

Pss: 1027016 kB

Pss: 1027092 kB
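For anyone wanting to collect comparable per-process figures, one possible approach is to sum the `Pss:` lines of `/proc/<pid>/smaps`. This is only a sketch; it is an assumption that the numbers above were gathered in a similar way, and the helper names `total_pss_kb` and `read_pss_kb` are hypothetical.

```python
import re

# Matches per-mapping "Pss:   <n> kB" lines only; the anchored "Pss:" prefix
# deliberately skips related fields such as "Pss_Anon:" on newer kernels.
PSS_LINE = re.compile(r"^Pss:\s+(\d+)\s+kB$", re.MULTILINE)


def total_pss_kb(smaps_text):
    """Sum all per-mapping Pss values (in kB) found in smaps-formatted text."""
    return sum(int(kb) for kb in PSS_LINE.findall(smaps_text))


def read_pss_kb(pid):
    """Read /proc/<pid>/smaps (Linux only, needs permission) and return total Pss in kB."""
    with open(f"/proc/{pid}/smaps") as smaps:
        return total_pss_kb(smaps.read())
```

Calling `read_pss_kb` for the PID of each trapper and poller process and sorting the results would give a list like the ones above, making the outlier processes easy to spot.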

Some screenshots attached.

OS:

p0infm-ap5103lv ~ $ uname -a

Linux 4.18.0-240.10.1.el8_3.x86_64 #1 SMP Wed Dec 16 03:30:52 EST 2020 x86_64 x86_64 x86_64 GNU/Linux

p0infm-ap5103lv ~ $ cat /etc/*release*

cat: /etc/lsb-release.d: Is a directory

NAME="Red Hat Enterprise Linux"

VERSION="8.3 (Ootpa)"

ID="rhel"

ID_LIKE="fedora"

VERSION_ID="8.3"

PLATFORM_ID="platform:el8"

PRETTY_NAME="Red Hat Enterprise Linux 8.3 (Ootpa)"

ANSI_COLOR="0;31"

CPE_NAME="cpe:/o:redhat:enterprise_linux:8.3:GA"

HOME_URL="https://www.redhat.com/"

BUG_REPORT_URL="https://bugzilla.redhat.com/"

REDHAT_BUGZILLA_PRODUCT="Red Hat Enterprise Linux 8"

REDHAT_BUGZILLA_PRODUCT_VERSION=8.3

REDHAT_SUPPORT_PRODUCT="Red Hat Enterprise Linux"

REDHAT_SUPPORT_PRODUCT_VERSION="8.3"

Red Hat Enterprise Linux release 8.3 (Ootpa)

Red Hat Enterprise Linux release 8.3 (Ootpa)

cpe:/o:redhat:enterprise_linux:8.3:ga

Some recent memory usage graphs:

Caches:

Checks:

select delay,key_,count(*) from items where flags = 1 group by delay, key_ order by count(*) desc;

-----

delay | key_ | count

-------+------------------------------------------------------------------------------------------------------------------------------+-------

1h | vfs.fs.discovery | 7148

1h | net.if.discovery | 7096

0 | vfs.dev.discovery | 656

1h | vmware.hv.datastore.discovery[{$URL},{HOST.HOST}] | 291

1d | memoryStatus-[{#SNMPVALUE}] | 231

1d | cpuStatus-[{#SNMPVALUE}] | 231

1d | raidControllerStatus-[{#SNMPVALUE}] | 231

1d | powerSupplyStatus-[{#SNMPVALUE}] | 231

1d | hardDiskStatus-[{#SNMPVALUE}] | 231

1h | system.run["{$CMD}"] | 183

1d | psu.discovery | 147

1d | ProcEnum | 143

1d | array.cache.discovery | 143

1d | MemoryEnum | 143

1d | physicaldisk.discovery | 143

1d | physicaldisk.arr.discovery | 143

30m | jmx.get[beans,"jboss.as:subsystem=datasources,data-source=*,statistics=pool"] | 131

1m | jmx.get[beans,"jboss.as.expr:deployment=*"] | 131

30m | jmx.get[attributes,"jboss.as:management-root=server,*"] | 131

30m | jmx.get[beans,"jboss.as:subsystem=undertow,server=*,http-listener=*"] | 131

30m | jmx.get[beans,"jboss.as:subsystem=datasources,data-source=*,statistics=jdbc"] | 131

30m | jmx.get[beans,"*:type=GarbageCollector,name=*"] | 131

30m | jmx.get[beans,"*:type=MemoryPool,name=*"] | 131

1h | system.run[df | awk '{print $7}'] | 59

1h | system.run[lsdev | grep fcs | awk '{print $1}'] | 59

1h | pgsql.discovery.db["{$PG.HOST}","{$PG.PORT}","{$PG.USER}","{$PG.DB}","{$PG.USER.PASSWD}"] | 42

1h | system.run[df | grep /pg | awk '{print $6}'] | 42

1h | pgsql.discovery.replica["{$PG.HOST}","{$PG.PORT}","{$PG.USER}","{$PG.DB}","{$PG.USER.PASSWD}"] | 42

1h | system.run[cat /var/lib/zabbix/postgresql/pg_service.conf] | 32

1h | system.run[sudo $(sudo -l | grep asmcmd | cut -d' ' -f7 | sort -u) lsdg --suppressheader | awk '{print $14}' | sed 's/\///'] | 8

1h | system.run[df | awk '{print $6}'] | 8

0 | arangodb.lld.agent | 6

0 | arangodb.lld.coordinator | 6

1h | system.run[df | egrep '{$KAFKA_FS}' | cut -d' ' -f1 | sed 's/ //' | sed 's/\/dev\/mapper\///'] | 6

1h | system.run[df | egrep '{$KAFKA_FS}' | cut -d'%' -f2 | sed 's/ //'] | 6

0 | arangodb.lld.dbserver | 6

3600 | CstEntry | 5

5m | ssh.run[zookeeper] | 5

3600 | DstEntry | 5

3600 | citEntry | 5

3600 | hypervisorEntry | 5

1d | physicalDisk.discovery | 4

1d | memory.discovery | 4

1h | system.run[df | grep /app | cut -d'%' -f2 | sed 's/ //'] | 3

1h | vfs.file.contents["/var/lib/zabbix/custom_dns.txt"] | 1

1h | vmware.datastore.discovery[{$URL}] | 1

1h | system.run[df -h | egrep '/usr/sap|/hana' | awk '{print $6}'] | 1

1h | system.run[zpool list -H | awk '{print $1}'] | 1

1h | vmware.vm.discovery[{$URL}] | 1

0 | ogg.process.discovery | 1

1d | vmware.cluster.discovery[{$URL}] | 1

1d | vmware.hv.discovery[{$URL}] | 1

(52 rows)
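To get a rough feel for how many discovery executions these rules generate, the `delay` and `count` columns can be combined. The sketch below assumes plain delays only (a bare number of seconds or a number with an `s`, `m`, `h`, `d`, or `w` suffix); delays containing user macros or custom intervals are not handled, and rows with a delay of `0` are skipped because they have no fixed interval. The names `delay_to_seconds` and `runs_per_hour` are hypothetical.

```python
# Seconds per Zabbix time suffix.
SUFFIX_SECONDS = {"s": 1, "m": 60, "h": 3600, "d": 86400, "w": 604800}


def delay_to_seconds(delay):
    """Convert a plain delay such as '1h', '30m', '1d' or '3600' to seconds."""
    delay = delay.strip()
    if delay and delay[-1] in SUFFIX_SECONDS:
        return int(delay[:-1]) * SUFFIX_SECONDS[delay[-1]]
    return int(delay)


def runs_per_hour(rows):
    """Estimate executions per hour from (delay, count) pairs, skipping delay 0."""
    total = 0.0
    for delay, count in rows:
        seconds = delay_to_seconds(delay)
        if seconds > 0:
            total += count * 3600 / seconds
    return total
```

For example, passing the first three rows of the result as `[("1h", 7148), ("1h", 7096), ("0", 656)]` counts only the two hourly rules. This is an estimate of scheduling load only and says nothing about the size of the values each rule returns.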