- Type: Problem report
- Resolution: Fixed
- Priority: Trivial
- Affects Version/s: 6.0.3
- Component/s: Installation (I), Server (S)
- None
- Environment: Ubuntu 20 EC2 instances in AWS, each running LXD with one Galera DB node and one Server instance, across three unique AZs
- Sprint 90 (Jul 2022)
- 0.5

Steps to reproduce:

- Upgrade from 5.4.9 direct to 6.0.3

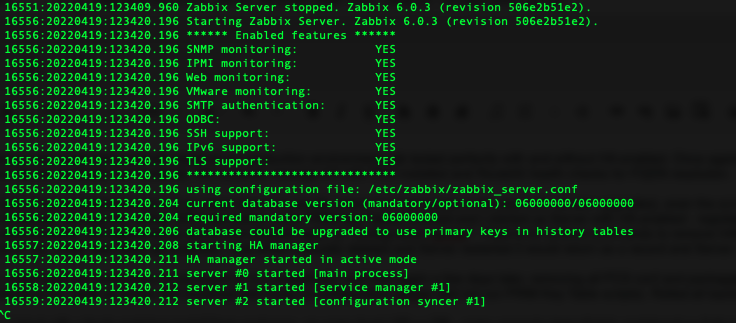

- Upon Server restart DB upgrades successfully, notes PRIM Key changes

- Services/processes hang after HA Node check on "configuration syncer"

Result:

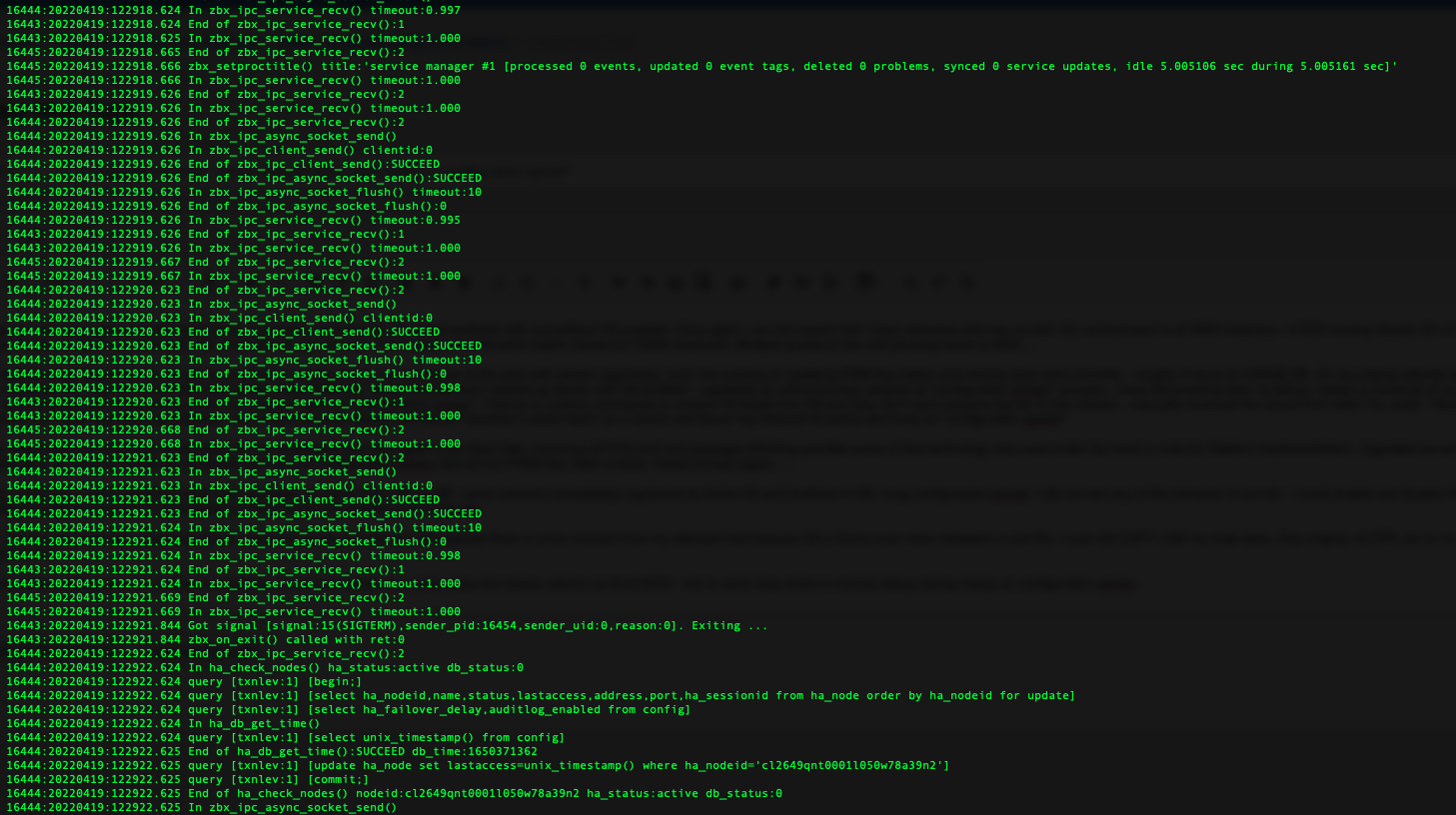

Screenshots of the Server log are attached. I made a forum post but received no replies describing similar scenarios. The screenshots were taken with all HA parameters commented out in the conf file. In fact, it is not even the 6.0 package conf file: it is still the 5.4.9 one, which contains none of the HA configuration lines.

Summary Description:

Our environment runs entirely on AWS: 3 EC2 instances running Ubuntu 20 with LXD, each hosting a DB container (Galera cluster) and a Zabbix Server container (with frontend). We currently manage HA with PCS, plus Route53 health checks for FQDN resolution. Multiple proxies in the field report back to AWS.

Upgrading the database itself was not an issue (that is usually where I have had problems with past version upgrades). Even the scripted update of the primary key tables and the data migration went smoothly, taking roughly 4 hours for a 200 GB DB. On my first upgrade attempt, I shut down all PCS standby nodes (2 of the 3 EC2 instances), so only the "active" EC2 was online. Once the primary key tables finished and I started the Server with HA enabled, it registered as active but then stopped at the "configuration syncer" process. It never did anything else: no failure, no restart, and no progress to the remaining processes/services the Server needs to run. I disabled HA in the conf and restarted; it still registered as active and stopped again at "configuration syncer". I tried to use runtime commands to remove the HA node from the DB, but they don't work because the Server is not fully started, so I manually removed the record from the "ha_node" table. After restarting the service it still registered as HA active, but the record in "ha_node" was now listed as localhost. When I deleted that record manually and restarted the Server, the record came back, and the Server log showed HA active and hung on "configuration syncer".

I fully rolled back our production environment to 5.4 (from snapshots) and tried again a few days later, this time removing all PCS configuration and packages (in case Zabbix's HA implementation used some of that technology under the hood). I upgraded the Server to 6.0 again with HA not enabled in the conf, and it immediately registered in ha_node as Active with localhost and hung on configuration syncer (I did not run the primary key table scripts this time). I rolled everything back again.

Today I tried a fresh container install from packages, on the same LXD host as the DB, with the same result: it immediately registered as HA Active with localhost in the DB and hung on configuration syncer. I did not see any of this behavior in our lab, where I could enable and disable HA and the Server behaved as expected.

Now I'm at a standstill with the upgrade. The Server never fully boots, and after the DB upgrade some remnant from my attempts still believes HA is active even when it is disabled in the conf file. I even copied the ha_node table (COPY LIKE), dropped the original, ran ALTER, etc., with no change.

Of note: with debug level 4 on the Server, it is constantly looping on the ha_node status check, which always returns SUCCEED. This is the same loop that runs behind the scenes while the normal startup log shows services hung on configuration syncer.

Expected:

Services/processes start as normal and the Server boots fully.