- Type: Problem report
- Resolution: Duplicate
- Priority: Trivial
- Affects Version/s: 6.0.4
- Component/s: Server (S)

Environment: Oracle Linux 8, PostgreSQL 13.6, TimescaleDB 2.3.1

This issue started after upgrading from 5.0.17 to 6.0.4.

Diaginfo:

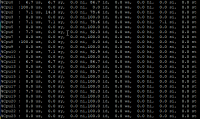

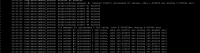

== history cache diagnostic information == Items:0 values:0 time:0.000023 Memory.data: size: free:1073741440 used:0 chunks: free:1 used:0 min:1073741440 max:1073741440 buckets: 256+:1 Memory.index: size: free:1073369232 used:372080 chunks: free:3 used:5 min:8072 max:1072532296 buckets: 256+:3 Top.values: ==

== preprocessing diagnostic information == Values:403502 done:361959 queued:0 processing:1314 pending:40229 time:2.981349 Top.values: itemid:1296943 values:108 steps:0 itemid:3276248 values:99 steps:0 itemid:3382122 values:99 steps:0 itemid:3380145 values:99 steps:0 itemid:1402337 values:99 steps:0 itemid:3276229 values:99 steps:0 itemid:1296945 values:99 steps:0 itemid:1056895 values:99 steps:0 itemid:1402346 values:99 steps:0 itemid:2014796 values:99 steps:0 itemid:3276268 values:99 steps:0 itemid:2078554 values:98 steps:0 itemid:5488727 values:50 steps:2 itemid:5488736 values:50 steps:1 itemid:5488737 values:50 steps:3 itemid:5488739 values:50 steps:3 itemid:5488740 values:50 steps:2 itemid:5488747 values:50 steps:1 itemid:5488748 values:50 steps:1 itemid:5488753 values:50 steps:1 itemid:5488757 values:50 steps:2 itemid:5488761 values:50 steps:1 itemid:1410359 values:50 steps:1 itemid:1410374 values:50 steps:2 itemid:1410377 values:50 steps:2 Top.oldest.preproc.values: ==

== locks diagnostic information == Locks: ZBX_MUTEX_LOG:0x7f5d60c33000 ZBX_MUTEX_CACHE:0x7f5d60c33028 ZBX_MUTEX_TRENDS:0x7f5d60c33050 ZBX_MUTEX_CACHE_IDS:0x7f5d60c33078 ZBX_MUTEX_SELFMON:0x7f5d60c330a0 ZBX_MUTEX_CPUSTATS:0x7f5d60c330c8 ZBX_MUTEX_DISKSTATS:0x7f5d60c330f0 ZBX_MUTEX_VALUECACHE:0x7f5d60c33118 ZBX_MUTEX_VMWARE:0x7f5d60c33140 ZBX_MUTEX_SQLITE3:0x7f5d60c33168 ZBX_MUTEX_PROCSTAT:0x7f5d60c33190 ZBX_MUTEX_PROXY_HISTORY:0x7f5d60c331b8 ZBX_MUTEX_MODBUS:0x7f5d60c331e0 ZBX_MUTEX_TREND_FUNC:0x7f5d60c33208 ZBX_RWLOCK_CONFIG:0x7f5d60c33230 ZBX_RWLOCK_VALUECACHE:0x7f5d60c33268 ==

== value cache diagnostic information == Items:0 values:0 mode:-1 time:0.000000 Memory: size: free:0 used:0 chunks: free:0 used:0 min:0 max:0 buckets: Top.values: Top.request.values: ==

== LLD diagnostic information == Rules:0 values:0 time:0.000134 Top.values: ==

== alerting diagnostic information == Alerts:0 time:0.000140 Media.alerts: Source.alerts: ==
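In the preprocessing section of the dump above, the counters add up exactly: done + queued + processing + pending = 361959 + 0 + 1314 + 40229 = 403502 = Values. That means roughly 10.3% of the received values were still unfinished when the snapshot was taken. The following is a minimal Python sketch for checking this on other snapshots (for example, output from `zabbix_server -R diaginfo=preprocessing`). It assumes two things: that the header line keeps this space-separated `key:value` layout, and that the four counters partition `Values`. Both hold for this dump but are inferences, not documented guarantees. The helper names `parse_counters` and `backlog_summary` are hypothetical.

```python
import re

# Header of the "preprocessing diagnostic information" section, copied
# verbatim from the diaginfo dump in this report.
SAMPLE = ("Values:403502 done:361959 queued:0 processing:1314 "
          "pending:40229 time:2.981349")


def parse_counters(line: str) -> dict:
    """Parse space-separated key:value tokens (assumed diaginfo layout)."""
    counters = {}
    for key, value in re.findall(r"(\w+):([\d.]+)", line):
        # "time" carries a fractional part; the other counters are integers.
        counters[key] = float(value) if "." in value else int(value)
    return counters


def backlog_summary(counters: dict) -> dict:
    """Summarise unfinished preprocessing work (hypothetical helper)."""
    total = counters["Values"]
    # Values received but not yet fully preprocessed (assumed meaning).
    backlog = counters["queued"] + counters["processing"] + counters["pending"]
    return {
        # True when done + queued + processing + pending equals Values.
        "consistent": counters["done"] + backlog == total,
        "backlog": backlog,
        "backlog_pct": round(100 * backlog / total, 1),
    }


if __name__ == "__main__":
    print(backlog_summary(parse_counters(SAMPLE)))
    # {'consistent': True, 'backlog': 41543, 'backlog_pct': 10.3}
```

When you compare several snapshots taken a few seconds apart, a steadily rising `backlog` matches the growing queue described under Result.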

Steps to reproduce:

- Upgrade Zabbix from 5.0 to 6.0 (server only; the proxy is not affected).

- Monitor the preprocessing manager.

Result:

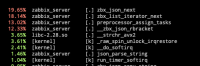

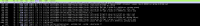

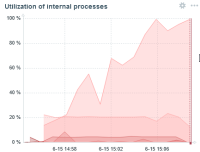

The preprocessing manager queue keeps growing. The preprocessing manager then reaches 100% CPU usage, and one of the 50 preprocessing workers also runs at 100% CPU (see screenshots). All Zabbix checks then fail. For example, Zabbix reports the agent as DOWN and marks the host as unreachable, although a manual check shows it is UP. Once the queue is full, the OOM killer terminates zabbix-server.

Duplicates: ZBX-20590 preprocessing worker utilization (Closed)