-

Type: Problem report

-

Resolution: Won't fix

-

Priority: Trivial

-

None

-

Affects Version/s: 7.0.2

-

Component/s: Server (S)

-

None

-

Environment:

Docker version 27.0.3, build 7d4bcd8

Ubuntu 22.04.4

AMD EPYC 7302P 16-Core Processor (4 cores)

8 GB RAM

Steps to reproduce:

- Migrate from Zabbix 6.0 LTS to 7.0 LTS (7.0.2), with the 7.0 server running inside a Docker container

- Start the Zabbix server

- Have some HTTP agent items configured

- Monitor the CPU usage of the HTTP Agent Poller processes
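For the monitoring step, one possible way to measure the CPU usage of a single poller process is to sample `/proc/<pid>/stat` twice and compare the accumulated CPU time. This is only a minimal sketch: the helper names (`cpu_ticks`, `cpu_percent`, `sample`) are hypothetical, the PID of the poller has to be looked up separately (for example with `ps`), and inside Docker the script must run in a PID namespace where that PID is visible.

```python
import os
import sys
import time


def cpu_ticks(stat_line: str) -> int:
    """Return utime + stime (in clock ticks) from one /proc/<pid>/stat line."""
    # Field 2 (the command name) is parenthesised and may contain spaces,
    # so only the part after the last ')' is split on whitespace.
    rest = stat_line[stat_line.rindex(")") + 2:].split()
    # rest[0] is field 3 (state), so field 14 (utime) is rest[11]
    # and field 15 (stime) is rest[12].
    return int(rest[11]) + int(rest[12])


def cpu_percent(before: int, after: int, interval_s: float, ticks_per_s: int) -> float:
    """CPU usage over the interval, where 100.0 means one fully used core."""
    return 100.0 * (after - before) / (ticks_per_s * interval_s)


def sample(pid: int, interval_s: float = 5.0) -> float:
    """Sample the process twice and return its CPU usage in percent."""
    ticks_per_s = os.sysconf("SC_CLK_TCK")
    with open(f"/proc/{pid}/stat") as f:
        before = cpu_ticks(f.read())
    time.sleep(interval_s)
    with open(f"/proc/{pid}/stat") as f:
        after = cpu_ticks(f.read())
    return cpu_percent(before, after, interval_s, ticks_per_s)


if __name__ == "__main__":
    # Usage (hypothetical file name): python3 poller_cpu.py <pid>
    print(f"{sample(int(sys.argv[1])):.1f}% CPU")
```

A value that stays close to 100% across repeated samples would correspond to the "entire CPU core" behaviour described in this report.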

Information:

The issue has been reproduced with the same configuration across multiple hosts and separate environments.

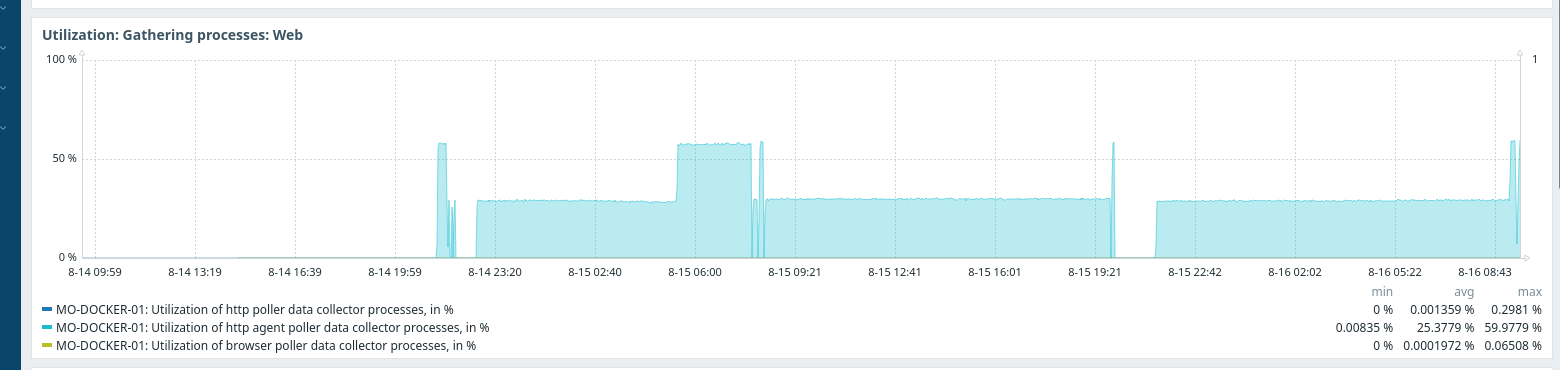

The system can be up for multiple hours, performing the exact same workload, before encountering the issue.

Running strace on an HTTP Agent Poller while it is consuming the CPU shows a large number of the following calls:

epoll_pwait(15, [], 32, 0, NULL, 8) = 0

times(NULL) = 1739159558

epoll_pwait(19, [{events=EPOLLIN, data={u32=29, u64=29}}, {events=EPOLLIN, data={u32=30, u64=30}}, {events=EPOLLIN, data={u32=27, u64=27}}], 32, 265, NULL, 8) = 3

times(NULL) = 1739159558

epoll_pwait(15, [], 32, 0, NULL, 8) = 0

times(NULL) = 1739159558

epoll_pwait(19, [{events=EPOLLIN, data={u32=29, u64=29}}, {events=EPOLLIN, data={u32=30, u64=30}}, {events=EPOLLIN, data={u32=27, u64=27}}], 32, 265, NULL, 8) = 3

times(NULL) = 1739159558

epoll_pwait(15, [], 32, 0, NULL, 8) = 0

times(NULL) = 1739159558

epoll_pwait(19, [{events=EPOLLIN, data={u32=29, u64=29}}, {events=EPOLLIN, data={u32=30, u64=30}}, {events=EPOLLIN, data={u32=27, u64=27}}], 32, 265, NULL, 8) = 3

times(NULL) = 1739159558

epoll_pwait(15, [], 32, 0, NULL, 8) = 0

times(NULL) = 1739159558

epoll_pwait(19, [{events=EPOLLIN, data={u32=29, u64=29}}, {events=EPOLLIN, data={u32=30, u64=30}}, {events=EPOLLIN, data={u32=27, u64=27}}], 32, 265, NULL, 8) = 3

times(NULL) = 1739159558
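To quantify a pattern like the one in the trace above, a captured strace log can be summarized by grouping the `epoll_pwait` calls by epoll descriptor, timeout and return value. The following is a rough sketch, not a diagnostic tool: the function names (`summarize`, `zero_timeout_empty`) and the trace file name are hypothetical, and the regular expression is written only against the line shape shown in this report. A high count of zero-timeout calls that return 0 would be consistent with a non-blocking polling loop, but the summary by itself does not establish the cause.

```python
import re
import sys
from collections import Counter

# Matches lines such as:
#   epoll_pwait(15, [], 32, 0, NULL, 8) = 0
# Groups: epoll fd, raw event list, timeout in ms, return value.
EPOLL_RE = re.compile(
    r"epoll_pwait\((\d+), \[(.*)\], \d+, (-?\d+), \S+, \d+\)\s*=\s*(-?\d+)"
)


def summarize(lines):
    """Count epoll_pwait calls grouped by (epoll fd, timeout ms, return value)."""
    counts = Counter()
    for line in lines:
        match = EPOLL_RE.search(line)
        if match:
            epfd, _events, timeout_ms, ret = match.groups()
            counts[(int(epfd), int(timeout_ms), int(ret))] += 1
    return counts


def zero_timeout_empty(counts):
    """Number of calls that used timeout 0 and reported no ready descriptors."""
    return sum(
        n for (_epfd, timeout_ms, ret), n in counts.items()
        if timeout_ms == 0 and ret == 0
    )


if __name__ == "__main__":
    # Usage (hypothetical file name): python3 epoll_summary.py poller.trace
    with open(sys.argv[1]) as f:
        counts = summarize(f)
    for (epfd, timeout_ms, ret), n in counts.most_common():
        print(f"epfd={epfd} timeout={timeout_ms}ms returned={ret}: {n} calls")
    print(f"zero-timeout calls with no events: {zero_timeout_empty(counts)}")
```

The input file can be produced with `strace -p <pid> -o poller.trace`, left running for a few seconds while the poller is at full load.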

The only way to return the processes to normal CPU usage is to restart the Zabbix server processes.

Memory usage is low.

Result:

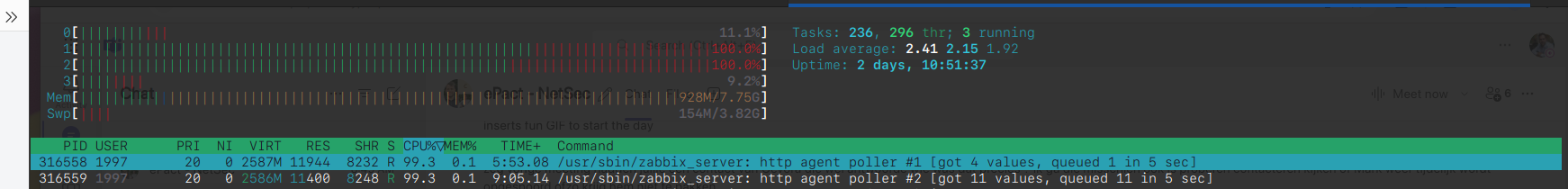

Anywhere from 1 minute to (as observed) multiple hours after startup, an HTTP Agent Poller process starts consuming an entire CPU core and never returns to normal load. Load on the previous installation (same hardware and OS) was negligible.

Initially, only one HTTP Agent Poller process peaks at 100%. Usually, after a while, the other HTTP Agent Poller processes follow and each starts consuming an entire core.