-

Type:

Change Request

-

Resolution: Fixed

-

Priority:

Blocker

-

Affects Version/s: 6.2.0

-

Component/s: Server (S)

-

Sprint 91 (Aug 2022), Sprint 92 (Sep 2022), Sprint 93 (Oct 2022)

-

2

Server version 5.0.20, using Postgresql.

So history syncers may be not so fast because of evaluating triggers, where is lack of history values in value cache and it needs to read history form database, which sometimes takes a few seconds.

On a bigger installations (NVPS ~26K), 30 syncers started, syncers are busy by waiting response from an SQLs, see below.

All timestamps in server log are in UTC timezone!

At the time of collecting debug and strase, server experienced serious performance issues. Data on graphs was with ~40-60+ minutes delay.

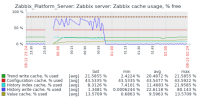

Value cache on the server is 16G, used less than 10%.

All 30 syncers are busy all the time and we always have exactly 30 SQLs running (1-2-3 seconds long) when selecting data for week period, like this:

select clock,ns,value from history_uint where itemid=9305898 and clock<=1655747049 and clock>1655142249 order by clock desc limit 3

Latest data (history collected by particular itemIDs) was taken next day after debug and strace collection.

All considered items below do not have preprocessing, type active agent, 1m update interval.

Looking on value's timestamps in debug section, looks like server was syncing the values and evaluating related expression with ~75 minutes delay.

Usually, when server health is fine, graph of value cache misses is very low - 0-1vps, but at the time of debug/strace collection, misses were frequently peaking to xK-xxK vps.

Here a few different examples:

From Debug log:

fast expression evaluation without SQLs - value cache is used as should be ideally:

13420:20220621:200135.121 In evaluate_function() function:'hkg-p01-r09-svr09.xx.xxxx.xx:proc.num[mmr,xxxxapp,,/opt/ssb/bin/mmr].avg(#3)' 13420:20220621:200135.121 In evaluate_AVG() 13420:20220621:200135.121 In get_function_parameter_int() parameters:'#3' Nparam:1 13420:20220621:200135.121 get_function_parameter_int() type:num value:3 13420:20220621:200135.121 End of get_function_parameter_int():SUCCEED 13420:20220621:200135.121 In zbx_vc_get_values() itemid:4865850 value_type:3 seconds:0 count:3 sec:1655837090 ns:675046415 13420:20220621:200135.121 End of zbx_vc_get_values():SUCCEED count:3 cached:1 13420:20220621:200135.121 End of evaluate_AVG():SUCCEED 13420:20220621:200135.121 End of evaluate_function():SUCCEED value:'1'

value timestamp 1655837090 -> 2022-06-21 18:44:50 UTC

Host above is monitored by another proxy #2 (which had not issues), active agent, 1m update interval, the itemid: 4865850 history, which has good debug section - only value cache used:

2022-06-21 20:01:57 UP (1) 2022-06-21 20:00:57 UP (1) 2022-06-21 19:59:57 UP (1) 2022-06-21 19:58:57 UP (1) 2022-06-21 19:57:57 UP (1) 2022-06-21 19:56:57 UP (1) 2022-06-21 19:55:57 UP (1) 2022-06-21 19:54:57 UP (1) 2022-06-21 19:53:57 UP (1) 2022-06-21 19:52:57 UP (1) 2022-06-21 19:51:57 UP (1) 2022-06-21 19:50:57 UP (1) 2022-06-21 19:49:56 UP (1) 2022-06-21 19:48:57 UP (1)

fast evaluation, but with SQLs (not ideal behavior) becasue of lack data in value cache:

13420:20220621:200122.134 In evaluate_function() function:'sjc-p07-r26-svr12.xx.xxxx.xx:proc.num[mmr-4,xxxxapp,,/opt/ssb/bin/mmr-4].avg(#3)' 13420:20220621:200122.134 In evaluate_AVG() 13420:20220621:200122.134 In get_function_parameter_int() parameters:'#3' Nparam:1 13420:20220621:200122.134 get_function_parameter_int() type:num value:3 13420:20220621:200122.134 End of get_function_parameter_int():SUCCEED 13420:20220621:200122.134 In zbx_vc_get_values() itemid:9305577 value_type:3 seconds:0 count:3 sec:1655837090 ns:439279268 13420:20220621:200122.134 In zbx_history_get_values() itemid:9305577 value_type:3 start:1655837090 count:0 end:2145916800 13420:20220621:200122.134 query [txnlev:1] [select clock,ns,value from history_uint where itemid=9305577 and clock>1655837090] 13420:20220621:200122.136 End of zbx_history_get_values():SUCCEED values:0 13420:20220621:200122.136 In zbx_history_get_values() itemid:9305577 value_type:3 start:0 count:4 end:1655837090 13420:20220621:200122.136 query [txnlev:1] [select clock,ns,value from history_uint where itemid=9305577 and clock<=1655837090 and clock>1655833490 order by clock desc limit 4] 13420:20220621:200122.138 query [txnlev:1] [select clock,ns,value from history_uint where itemid=9305577 and clock<=1655833490 and clock>1655747090 order by clock desc limit 3] 13420:20220621:200122.141 query [txnlev:1] [select clock,ns,value from history_uint where itemid=9305577 and clock<=1655747090 and clock>1655142290 order by clock desc limit 3] 13420:20220621:200124.945 query [txnlev:1] [select clock,ns,value from history_uint where itemid=9305577 and clock=1655668740] 13420:20220621:200124.947 End of zbx_history_get_values():SUCCEED values:4 13420:20220621:200124.947 End of zbx_vc_get_values():SUCCEED count:3 cached:1 13420:20220621:200124.947 End of evaluate_AVG():SUCCEED 13420:20220621:200124.947 End of evaluate_function():SUCCEED value:'1'

value timestamp 1655837090 -> 2022-06-21 18:44:50 UTC

(history for this itemid was not checked)

slow SQL (worse behavior), even more than 3 seconds, so also logged as slow SQL:

13420:20220621:200135.121 In evaluate_function() function:'sjc-p07-r11-svr33.xx.xxxx.xx:proc.mem[filebeat,,,filebeat,rss].min(#5)' 13420:20220621:200135.121 In evaluate_MIN_or_MAX() 13420:20220621:200135.121 In get_function_parameter_int() parameters:'#5' Nparam:1 13420:20220621:200135.121 get_function_parameter_int() type:num value:5 13420:20220621:200135.122 End of get_function_parameter_int():SUCCEED 13420:20220621:200135.122 In zbx_vc_get_values() itemid:9210477 value_type:3 seconds:0 count:5 sec:1655837090 ns:666763482 13420:20220621:200135.122 In zbx_history_get_values() itemid:9210477 value_type:3 start:1655837090 count:0 end:2145916800 13420:20220621:200135.122 query [txnlev:1] [select clock,ns,value from history_uint where itemid=9210477 and clock>1655837090] 13420:20220621:200135.124 End of zbx_history_get_values():SUCCEED values:0 13420:20220621:200135.124 In zbx_history_get_values() itemid:9210477 value_type:3 start:0 count:6 end:1655837090 13420:20220621:200135.124 query [txnlev:1] [select clock,ns,value from history_uint where itemid=9210477 and clock<=1655837090 and clock>1655833490 order by clock desc limit 6] 13420:20220621:200135.126 query [txnlev:1] [select clock,ns,value from history_uint where itemid=9210477 and clock<=1655833490 and clock>1655747090 order by clock desc limit 5] 13420:20220621:200135.128 query [txnlev:1] [select clock,ns,value from history_uint where itemid=9210477 and clock<=1655747090 and clock>1655142290 order by clock desc limit 5] 13420:20220621:200138.204 slow query: 3.075652 sec, "select clock,ns,value from history_uint where itemid=9210477 and clock<=1655747090 and clock>1655142290 order by clock desc limit 5" 13420:20220621:200138.204 query [txnlev:1] [select clock,ns,value from history_uint where itemid=9210477 and clock=1655668644] 13420:20220621:200138.206 End of zbx_history_get_values():SUCCEED values:6 13420:20220621:200138.206 End of zbx_vc_get_values():SUCCEED count:5 cached:1 13420:20220621:200138.206 End of evaluate_MIN_or_MAX():SUCCEED 13420:20220621:200138.206 End of evaluate_function():SUCCEED value:'29560832'

value timestamp 1655837090 -> 2022-06-21 18:44:50 UTC

Proxy #1 (which had issues at the time), active agent, 1m update interval, itemid: 9210477 history:

2022-06-21 20:48:33 484999168 2022-06-21 20:47:33 484999168 2022-06-21 19:25:13 484999168 2022-06-21 19:16:54 484999168 2022-06-21 19:15:54 484999168 2022-06-21 19:14:54 484999168 2022-06-21 19:13:54 484999168 2022-06-21 19:12:54 484999168 2022-06-21 19:11:54 484999168 2022-06-21 19:10:54 484999168 2022-06-21 19:09:54 484999168

we see missing data at ~20:01:35 and long time earlier (I remeber this og graphs).

So stat analysis:

# egrep "In zbx_vc_get_values" debug -c 5146 # egrep -A1 "In zbx_vc_get_values" debug | egrep "End of zbx_vc_get_values" -c 4696 # egrep -A1 "In zbx_vc_get_values" debug | egrep "In zbx_history_get_values" -c 450

difference 450, so 450/5146*100% = 8.7% of triggers evaluation cause accessing DB history.

From strace, all cases are with SQLs of cource. As cases when value cache only is used, are not visible for starce:

fast:

1655841071.516360 sendto(6, "Q\0\0\0Qselect clock,ns,value from history where itemid=9296897 and clock>1655837049\0", 82, MSG_NOSIGNAL, NULL, 0) = 82

1655841071.516398 poll([{fd=6, events=POLLIN|POLLERR}], 1, -1) = 1 ([{fd=6, revents=POLLIN}])

1655841071.518541 recvfrom(6, "T\0\0\0K\0\3clock\0\0\0F\242\0\2\0\0\0\27\0\4\377\377\377\377\0\0ns\0\0\0F\242\0\4\0\0\0\27\0\4\377\377\377\377\0\0value\0\0\0F\242\0\3\0\0\2\275\0\10\377\377\377\377\0\0C\0\0\0\rSELECT 0\0Z\0\0\0\5T", 16384, 0, NULL, NULL) = 96

1655841071.518582 sendto(6, "Q\0\0\0\203select clock,ns,value from history where itemid=9296897 and clock<=1655837049 and clock>1655833449 order by clock d"..., 132, MSG_NOSIGNAL, NULL, 0) = 132

1655841071.518620 poll([{fd=6, events=POLLIN|POLLERR}], 1, -1) = 1 ([{fd=6, revents=POLLIN}])

1655841071.598917 recvfrom(6, "T\0\0\0K\0\3clock\0\0\0F\242\0\2\0\0\0\27\0\4\377\377\377\377\0\0ns\0\0\0F\242\0\4\0\0\0\27\0\4\377\377\377\377\0\0value\0\0\0F\242\0\3\0\0\2\275\0\10\377\377\377\377\0\0D\0\0\0(\0\3\0\0\0\n1655837049\0\0\0\t603591837\0\0\0\39.2C\0\0"..., 16384, 0, NULL, NULL) = 137

1655841071.598960 sendto(6, "Q\0\0\0\203select clock,ns,value from history where itemid=9296897 and clock<=1655833449 and clock>1655747049 order by clock d"..., 132, MSG_NOSIGNAL, NULL, 0) = 132

1655841071.598999 poll([{fd=6, events=POLLIN|POLLERR}], 1, -1) = 1 ([{fd=6, revents=POLLIN}])

1655841071.601594 recvfrom(6, "T\0\0\0K\0\3clock\0\0\0F\242\0\2\0\0\0\27\0\4\377\377\377\377\0\0ns\0\0\0F\242\0\4\0\0\0\27\0\4\377\377\377\377\0\0value\0\0\0F\242\0\3\0\0\2\275\0\10\377\377\377\377\0\0C\0\0\0\rSELECT 0\0Z\0\0\0\5T", 16384, 0, NULL, NULL) = 96

1655841071.601630 sendto(6, "Q\0\0\0\203select clock,ns,value from history where itemid=9296897 and clock<=1655747049 and clock>1655142249 order by clock d"..., 132, MSG_NOSIGNAL, NULL, 0) = 132

1655841071.601671 poll([{fd=6, events=POLLIN|POLLERR}], 1, -1) = 1 ([{fd=6, revents=POLLIN}])

1655841071.653261 recvfrom(6, "T\0\0\0K\0\3clock\0\0\0F\242\0\2\0\0\0\27\0\4\377\377\377\377\0\0ns\0\0\0F\242\0\4\0\0\0\27\0\4\377\377\377\377\0\0value\0\0\0F\242\0\3\0\0\2\275\0\10\377\377\377\377\0\0D\0\0\0(\0\3\0\0\0\n1655668854\0\0\0\t151694766\0\0\0\39.1D\0\0"..., 16384, 0, NULL, NULL) = 219

1655841071.653305 sendto(6, "Q\0\0\0Qselect clock,ns,value from history where itemid=9296897 and clock=1655668734\0", 82, MSG_NOSIGNAL, NULL, 0) = 82

1655841071.653346 poll([{fd=6, events=POLLIN|POLLERR}], 1, -1) = 1 ([{fd=6, revents=POLLIN}])

1655841071.655457 recvfrom(6, "T\0\0\0K\0\3clock\0\0\0F\242\0\2\0\0\0\27\0\4\377\377\377\377\0\0ns\0\0\0F\242\0\4\0\0\0\27\0\4\377\377\377\377\0\0value\0\0\0F\242\0\3\0\0\2\275\0\10\377\377\377\377\0\0D\0\0\0(\0\3\0\0\0\n1655668734\0\0\0\t347926364\0\0\0\39.2C\0\0"..., 16384, 0, NULL, NULL) = 137

strace timestamp 1655841071 -> 2022-06-21 19:51:11 UTC

value timestamp 1655837049 -> 2022-06-21 18:44:09 UTC

Proxy #1 (which had issues at the time), active agent, 1m update interval, itemid: 9296897 history:

2022-06-21 20:50:26 9.1 2022-06-21 20:49:26 9.2 2022-06-21 20:48:23 9.2 2022-06-21 20:47:23 9.2 2022-06-21 19:17:13 9.1 2022-06-21 19:16:12 9.2 2022-06-21 19:15:12 9.2 2022-06-21 19:14:12 9.2 2022-06-21 19:13:12 9.2

slow:

1655841060.377075 sendto(6, "Q\0\0\0Vselect clock,ns,value from history_uint where itemid=9280986 and clock>1655837049\0", 87, MSG_NOSIGNAL, NULL, 0) = 87

1655841060.377113 poll([{fd=6, events=POLLIN|POLLERR}], 1, -1) = 1 ([{fd=6, revents=POLLIN}])

1655841060.379189 recvfrom(6, "T\0\0\0K\0\3clock\0\0\0F\251\0\2\0\0\0\27\0\4\377\377\377\377\0\0ns\0\0\0F\251\0\4\0\0\0\27\0\4\377\377\377\377\0\0value\0\0\0F\251\0\3\0\0\6\244\377\377\0\24\0\4\0\0C\0\0\0\rSELECT 0\0Z\0\0\0\5T", 16384, 0, NULL, NULL) = 96

1655841060.379230 sendto(6, "Q\0\0\0\210select clock,ns,value from history_uint where itemid=9280986 and clock<=1655837049 and clock>1655833449 order by cl"..., 137, MSG_NOSIGNAL, NULL, 0) = 137

1655841060.379268 poll([{fd=6, events=POLLIN|POLLERR}], 1, -1) = 1 ([{fd=6, revents=POLLIN}])

1655841060.381501 recvfrom(6, "T\0\0\0K\0\3clock\0\0\0F\251\0\2\0\0\0\27\0\4\377\377\377\377\0\0ns\0\0\0F\251\0\4\0\0\0\27\0\4\377\377\377\377\0\0value\0\0\0F\251\0\3\0\0\6\244\377\377\0\24\0\4\0\0D\0\0\0/\0\3\0\0\0\n1655837049\0\0\0\t570782566\0\0\0\n163407"..., 16384, 0, NULL, NULL) = 144

1655841060.381541 sendto(6, "Q\0\0\0\210select clock,ns,value from history_uint where itemid=9280986 and clock<=1655833449 and clock>1655747049 order by cl"..., 137, MSG_NOSIGNAL, NULL, 0) = 137

1655841060.381579 poll([{fd=6, events=POLLIN|POLLERR}], 1, -1) = 1 ([{fd=6, revents=POLLIN}])

1655841060.383830 recvfrom(6, "T\0\0\0K\0\3clock\0\0\0F\251\0\2\0\0\0\27\0\4\377\377\377\377\0\0ns\0\0\0F\251\0\4\0\0\0\27\0\4\377\377\377\377\0\0value\0\0\0F\251\0\3\0\0\6\244\377\377\0\24\0\4\0\0C\0\0\0\rSELECT 0\0Z\0\0\0\5T", 16384, 0, NULL, NULL) = 96

1655841060.383868 sendto(6, "Q\0\0\0\210select clock,ns,value from history_uint where itemid=9280986 and clock<=1655747049 and clock>1655142249 order by cl"..., 137, MSG_NOSIGNAL, NULL, 0) = 137

1655841060.383904 poll([{fd=6, events=POLLIN|POLLERR}], 1, -1) = 1 ([{fd=6, revents=POLLIN}])

1655841062.370480 recvfrom(6, "T\0\0\0K\0\3clock\0\0\0F\251\0\2\0\0\0\27\0\4\377\377\377\377\0\0ns\0\0\0F\251\0\4\0\0\0\27\0\4\377\377\377\377\0\0value\0\0\0F\251\0\3\0\0\6\244\377\377\0\24\0\4\0\0D\0\0\0/\0\3\0\0\0\n1655668833\0\0\0\t349689921\0\0\0\n163407"..., 16384, 0, NULL, NULL) = 240

1655841062.370686 sendto(6, "Q\0\0\0Vselect clock,ns,value from history_uint where itemid=9280986 and clock=1655668713\0", 87, MSG_NOSIGNAL, NULL, 0) = 87

1655841062.370729 poll([{fd=6, events=POLLIN|POLLERR}], 1, -1) = 1 ([{fd=6, revents=POLLIN}])

1655841062.372921 recvfrom(6, "T\0\0\0K\0\3clock\0\0\0F\251\0\2\0\0\0\27\0\4\377\377\377\377\0\0ns\0\0\0F\251\0\4\0\0\0\27\0\4\377\377\377\377\0\0value\0\0\0F\251\0\3\0\0\6\244\377\377\0\24\0\4\0\0D\0\0\0/\0\3\0\0\0\n1655668713\0\0\0\t831856222\0\0\0\n163407"..., 16384, 0, NULL, NULL) = 144

slow, more than 3 seconds:

1655841065.259808 sendto(6, "Q\0\0\0Vselect clock,ns,value from history_uint where itemid=9274660 and clock>1655837049\0", 87, MSG_NOSIGNAL, NULL, 0) = 87

1655841065.259845 poll([{fd=6, events=POLLIN|POLLERR}], 1, -1) = 1 ([{fd=6, revents=POLLIN}])

1655841065.261916 recvfrom(6, "T\0\0\0K\0\3clock\0\0\0F\251\0\2\0\0\0\27\0\4\377\377\377\377\0\0ns\0\0\0F\251\0\4\0\0\0\27\0\4\377\377\377\377\0\0value\0\0\0F\251\0\3\0\0\6\244\377\377\0\24\0\4\0\0C\0\0\0\rSELECT 0\0Z\0\0\0\5T", 16384, 0, NULL, NULL) = 96

1655841065.261967 sendto(6, "Q\0\0\0\210select clock,ns,value from history_uint where itemid=9274660 and clock<=1655837049 and clock>1655833449 order by cl"..., 137, MSG_NOSIGNAL, NULL, 0) = 137

1655841065.262014 poll([{fd=6, events=POLLIN|POLLERR}], 1, -1) = 1 ([{fd=6, revents=POLLIN}])

1655841065.264110 recvfrom(6, "T\0\0\0K\0\3clock\0\0\0F\251\0\2\0\0\0\27\0\4\377\377\377\377\0\0ns\0\0\0F\251\0\4\0\0\0\27\0\4\377\377\377\377\0\0value\0\0\0F\251\0\3\0\0\6\244\377\377\0\24\0\4\0\0D\0\0\0&\0\3\0\0\0\n1655837049\0\0\0\t574796757\0\0\0\0011C\0\0\0\r"..., 16384, 0, NULL, NULL) = 135

1655841065.264162 sendto(6, "Q\0\0\0\210select clock,ns,value from history_uint where itemid=9274660 and clock<=1655833449 and clock>1655747049 order by cl"..., 137, MSG_NOSIGNAL, NULL, 0) = 137

1655841065.264219 poll([{fd=6, events=POLLIN|POLLERR}], 1, -1) = 1 ([{fd=6, revents=POLLIN}])

1655841065.306610 recvfrom(6, "T\0\0\0K\0\3clock\0\0\0F\251\0\2\0\0\0\27\0\4\377\377\377\377\0\0ns\0\0\0F\251\0\4\0\0\0\27\0\4\377\377\377\377\0\0value\0\0\0F\251\0\3\0\0\6\244\377\377\0\24\0\4\0\0C\0\0\0\rSELECT 0\0Z\0\0\0\5T", 16384, 0, NULL, NULL) = 96

1655841065.306655 sendto(6, "Q\0\0\0\210select clock,ns,value from history_uint where itemid=9274660 and clock<=1655747049 and clock>1655142249 order by cl"..., 137, MSG_NOSIGNAL, NULL, 0) = 137

1655841065.306693 poll([{fd=6, events=POLLIN|POLLERR}], 1, -1) = 1 ([{fd=6, revents=POLLIN}])

1655841068.748264 recvfrom(6, "T\0\0\0K\0\3clock\0\0\0F\251\0\2\0\0\0\27\0\4\377\377\377\377\0\0ns\0\0\0F\251\0\4\0\0\0\27\0\4\377\377\377\377\0\0value\0\0\0F\251\0\3\0\0\6\244\377\377\0\24\0\4\0\0D\0\0\0&\0\3\0\0\0\n1655668858\0\0\0\t844046256\0\0\0\0011D\0\0\0&"..., 16384, 0, NULL, NULL) = 213

1655841068.748324 rt_sigprocmask(SIG_BLOCK, [HUP INT QUIT USR1 USR2 TERM], [INT QUIT USR1 USR2 TERM], 8) = 0

1655841068.748376 open("/var/log/zabbix/zabbix_server.log", O_RDWR|O_CREAT|O_APPEND, 0666) = 7

1655841068.748444 fstat(7, {st_mode=S_IFREG|0664, st_size=47364031, ...}) = 0

1655841068.748483 mmap(NULL, 4096, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANONYMOUS, -1, 0) = 0x7ff63fe66000

1655841068.748528 write(7, " 13420:20220621:195108.748 slow query: 3.441671 sec, \"select clock,ns,value from history_uint where itemid=9274660 and c"..., 187) = 187

1655841068.748575 close(7) = 0

1655841068.748603 munmap(0x7ff63fe66000, 4096) = 0

1655841068.748634 rt_sigprocmask(SIG_SETMASK, [INT QUIT USR1 USR2 TERM], NULL, 8) = 0

1655841068.748669 sendto(6, "Q\0\0\0Vselect clock,ns,value from history_uint where itemid=9274660 and clock=1655668738\0", 87, MSG_NOSIGNAL, NULL, 0) = 87

1655841068.748707 poll([{fd=6, events=POLLIN|POLLERR}], 1, -1) = 1 ([{fd=6, revents=POLLIN}])

1655841068.751224 recvfrom(6, "T\0\0\0K\0\3clock\0\0\0F\251\0\2\0\0\0\27\0\4\377\377\377\377\0\0ns\0\0\0F\251\0\4\0\0\0\27\0\4\377\377\377\377\0\0value\0\0\0F\251\0\3\0\0\6\244\377\377\0\24\0\4\0\0D\0\0\0&\0\3\0\0\0\n1655668738\0\0\0\t389221158\0\0\0\0011C\0\0\0\r"..., 16384, 0, NULL, NULL) = 135

strace timestamp 1655841065 -> 2022-06-21 19:51:05 UTC

value timestamp 1655837049 -> 2022-06-21 18:44:09 UTC

Proxy #1 (which had issues at the time), active agent, 1m update interval, itemid: 9274660 history:

2022-06-21 20:47:23 UP (1) 2022-06-21 19:16:57 UP (1) 2022-06-21 19:15:56 UP (1) 2022-06-21 19:14:56 UP (1) 2022-06-21 19:13:56 UP (1) 2022-06-21 19:12:56 UP (1) 2022-06-21 19:11:56 UP (1) 2022-06-21 19:10:56 UP (1) 2022-06-21 19:09:56 UP (1) 2022-06-21 19:08:56 UP (1)

Looking in the timestamp/second wraps in the strace and itemIDs changing, I'd evaluate that ~30-40% triggers evaluation have the slow SQL query (1-3 seconds long).

I've asked our devs for a reason why zabbix server 5.0.20, NVPS 26K, does not use value cache (16GB, ~10% used) to keep values older than a few hours, so then it is running selects for hour, day, week periods to get last 3-4-5 values?

Here is the answer:

Possible explanation - values are not being polled at regular intervals (for example trapper items). Value cache works by having 'active range' of values to keep in cache and older values are removed when new values come in. When getting N values from cache, it bases the active range on the oldest value timestamp (plus minute). So with irregular data input, the required values are dropped from cache and then read again from database.

If such items are used in triggers only with #N interval, then it would be possible to add another cleanup mode in value cache, that would be based on the number of values to keep.

Just in case, here is a view of different functions usage in your zabbix, to have an imagination. That ~30 seconds debug (just one of 30 syncers):

# egrep "In evaluate_function" debug | egrep -o "\.[a-z]+\(.+\)" | sort | uniq -c | sort -n 1 .last(#1) 1 .min(15m) 1 .nodata(3m) 2 .max(3m) 3 .min(3m) 3 .nodata(30m) 3 .regexp("(?i)(rts)") 7 .avg(#10) 8 .max(5m) 10 .nodata(5m) 12 .nodata(2m) 19 .avg(5m) 38 .max(15m) 38 .min(1h) 40 .max(10m) 40 .min(10m) 42 .max(#5) 46 .regexp("(424100(1|2|3|4)029|421501000(1|2|3|4))") 49 .count(#2,6) 49 .regexp("424100(1|2|3|4)007") 51 .min(#5) 60 .regexp([Rr]eserved|[Pp]ending|pss-7) 76 .timeleft(1h,,100) 116 .max(#3) 117 .regexp("(?i)(SERVER|SVR|NDR|reserved)") 145 .min(5m) 148 .avg(#3) 194 .min(#3) 286 .regexp("(?i)(itc|ixc|cxc)") 286 .regexp("(?i)(SERVER|SVR|svr|reserved|spare|bigdata)") 544 .nodata(1h) 730 .nodata(15m) 734 .max(#2)

So, maybe we could improve value cache usage to avoid accessing DB in such cases?

- mentioned in

-

Page Loading...