|

Update:

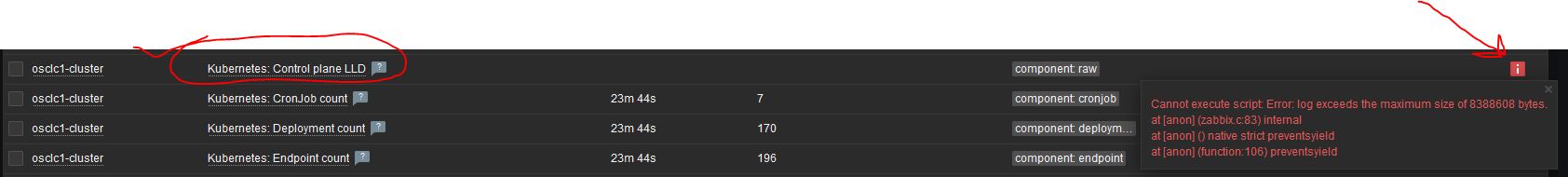

The error message keeps appearing for ALL discovery items except readyz and livez.

|

|

Hello Rudolf,

This limit can be modified:

https://git.zabbix.com/projects/ZBX/repos/zabbix/browse/src/libs/zbxembed/embed.h?at=refs%2Fheads%2Frelease%2F6.4#26

The default is 8 MB (8388608 bytes).

I am checking with the DEV team whether it can be safely increased in the upstream code.
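The figure in the error text, 8388608 bytes, is exactly 8 MiB (8 × 1024 × 1024). The sketch below is a hypothetical Python model of how such a cap behaves: messages accumulate until the next one would push the total past the limit, and then the script fails. `CappedLog` and `LogLimitExceeded` are illustrative names, not Zabbix code, and the model assumes the cap applies to the total UTF-8 size of the messages logged during one script run; the real engine may count additional overhead per entry.

```python
# Hypothetical model of a capped script log (not Zabbix source code).
LOG_LIMIT_BYTES = 8 * 1024 * 1024  # 8 MiB == 8388608, the figure in the error text


class LogLimitExceeded(Exception):
    """Raised when adding a message would exceed the log cap."""


class CappedLog:
    """Accumulates log messages and fails once the total size would pass a cap."""

    def __init__(self, limit=LOG_LIMIT_BYTES):
        self.limit = limit
        self.size = 0
        self.entries = []

    def log(self, message):
        # Assumption: only the UTF-8 size of the message text counts toward the cap.
        encoded = message.encode("utf-8")
        if self.size + len(encoded) > self.limit:
            raise LogLimitExceeded(
                f"log exceeds the maximum size of {self.limit} bytes."
            )
        self.size += len(encoded)
        self.entries.append(message)
```

Under this model, a discovery script that logs every response body from a large cluster could cross 8 MiB in a single run, which would be consistent with the reports in this thread; raising the limit in `embed.h` would move the threshold rather than remove it.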

Regards,

Edgar

|

|

Thank you very much Edgar!

Depending on the DEV team's feedback, we might consider patching our local build. I hope the report was useful to you!

|

|

Same issue with 6.0.14 on my side with a K8s cluster.

|

|

Same with Azure HTTP: the same error sometimes appears. Zabbix 6.4.

|

|

I have the same problem with Azure HTTP on Zabbix 6.4.0.

|

|

This should be fixed; the workaround is to comment out all Zabbix.log calls in the JavaScript.
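Commenting out the calls by hand is tedious in long template scripts. A minimal Python sketch that does it on a script's source text, assuming every `Zabbix.log(` call starts at the beginning of its own line and fits on that line (a call split across several lines would be left half-commented and break the script). The function name is hypothetical; review the output before pasting it back into the template.

```python
import re

# Matches a line whose first statement is a Zabbix.log( call; group 1 keeps the
# original indentation. Assumption: each call starts and ends on a single line.
LOG_CALL = re.compile(r"^([ \t]*)(Zabbix\.log\()", re.MULTILINE)


def comment_out_zabbix_log(script: str) -> str:
    """Return the script with '// ' inserted before each line-leading Zabbix.log( call."""
    return LOG_CALL.sub(r"\1// \2", script)


if __name__ == "__main__":
    sample = "var x = 1;\n    Zabbix.log(4, 'resp: ' + x);\nreturn x;"
    print(comment_out_zabbix_log(sample))
```

Lines that are already commented start with `//`, so running the helper twice leaves the script unchanged.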

|

|

@Vladislavs, after commenting out Zabbix.log in the JavaScript I get another error: Error: maximum count of HttpRequest objects was reached

|

|

Unfortunately there is no workaround for that, seniu123, but it is fixed under ZBX-22490.

|

|

Is there any information on when the next Zabbix version with these patches is planned to be released?

|

|

@edgar.akhmetshin, since you closed my #22614 as a duplicate of this one, can you also please add version 6.2.9 to the Versions to fix?

|

|

Same problem with AKS

|

|

Available in versions:

|

|

Also looking for a fix in the 6.2.x branch! We have a huge AWS EKS cluster, so for now we have worked around it by commenting out the log statements.

|

|

Please release a new version of the Helm chart: 6.4.1 has the same issue even though the server has already been upgraded.

|

|

I still get this error after upgrading to 6.0.17 (zabbix.com Ubuntu package).

Update: this was because the zabbix-proxy inside Kubernetes was still on 6.0.16. Updating it as well fixes the issue.

|

|

Hi,

I'm having the same problem even after upgrading to 6.4.2.

I'm using OpenShift Container Platform 4.10 (stable).

I have used the Zabbix 6.4 Helm chart, version 1.2.3 (zabbix-helm-chrt-1.2.3.tgz).

The 1.2.5 chart has the Zabbix proxy version set to 6.0. Why? (https://cdn.zabbix.com/zabbix/integrations/kubernetes-helm/6.4/zabbix-helm-chrt-1.2.3.tgz)

|

|

The problem still exists in version 6.4.3.

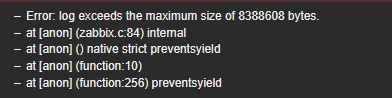

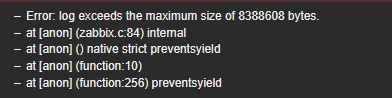

Cannot execute script: Error: log exceeds the maximum size of 8388608 bytes.

at [anon] (zabbix.c:83) internal

at [anon] () native strict preventsyield

at [anon] (function:239) preventsyield

UPD:

My bad: after re-linking the template, everything works.

|

|

Make sure all your proxies have been upgraded too.
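Several reports in this thread traced the error to a proxy left on an older release (for example a 6.0.16 proxy inside Kubernetes behind a 6.0.17 server). A minimal Python sketch of that check, assuming you already have each component's version as a plain dotted string such as "6.0.16"; the inventory dict and both function names are hypothetical, and how you collect the versions depends on your environment.

```python
def parse_version(version: str) -> tuple:
    """Turn '6.0.16' into (6, 0, 16); assumes purely numeric dotted versions."""
    return tuple(int(part) for part in version.split("."))


def outdated_components(server_version: str, components: dict) -> list:
    """Return the sorted names of components older than the server version."""
    target = parse_version(server_version)
    return sorted(
        name
        for name, version in components.items()
        if parse_version(version) < target
    )


if __name__ == "__main__":
    inventory = {"proxy-k8s": "6.0.16", "proxy-dc": "6.0.17"}  # hypothetical hosts
    print(outdated_components("6.0.17", inventory))
```

Comparing integer tuples rather than raw strings matters here: as strings, "6.0.9" would sort after "6.0.17".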

|

|

The problem still exists if you have the Zabbix server, proxies, and agents on 6.0.18, but only with the updated cluster state template and only for the kube-state-metrics item.

The proxy with the new template also uses a huge amount of resources and goes OOM.

|

|

Hi,

I updated the Helm chart two days ago. I'm using version 1.2.4 with Zabbix 6.4.3, and everything is working correctly.

Today I noticed that two more charts are available, 1.3.0 and 1.3.1 (https://cdn.zabbix.com/zabbix/integrations/kubernetes-helm/6.4/).

The changelog is available here: https://git.zabbix.com/projects/ZT/repos/kubernetes-helm/browse?at=refs%2Fheads%2Fmaster

Everything is working well for me now. I only had to change a macro on the hosts that use the template "Kubernetes nodes by HTTP".

The macro is {$KUBE.API.ENDPOINT.URL}, which now MUST BE https://api.xxx.xxx:6443 instead of https://api.xxx.xx:6443/api (so remove the "/api" at the end); otherwise the pod discovery rules don't work.
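The macro change described above amounts to dropping a trailing "/api" from {$KUBE.API.ENDPOINT.URL}. A small Python sketch of that normalization, for example when scripting macro updates across many hosts; the function name is hypothetical, and it only handles the "/api" suffix reported here. It works on the parsed URL path, so a host that happens to be named `api` is left alone.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize_kube_api_url(url: str) -> str:
    """Drop a trailing '/api' (and any trailing slash) from the URL path."""
    parts = urlsplit(url)
    path = parts.path.rstrip("/")
    if path.endswith("/api"):
        path = path[: -len("/api")]
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, parts.fragment))


if __name__ == "__main__":
    print(normalize_kube_api_url("https://api.example.com:6443/api"))
```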

My OpenShift cluster is build 4.10.47 (stable).

I'll test the latest Helm chart next week. The major change seems to be the updated kube-state-metrics pod.

Stay tuned and fingers crossed!

ADDENDUM: the proxy's memory usage has now grown too much: from about 400-500 MB to about 6800 MB.

|

|

Hi DevTeam,

as far as I can see, and also from our tests, the problem still seems to be present on Zabbix Server 6.0.17.

Is there something we can do to troubleshoot it?

Thanks so much

|

|

I have updated the chart to the latest available version, 1.3.2, which uses the updated kube-state-metrics, but the memory used by the Zabbix proxy is too high!

After 3 hours of uptime, the proxy has already reached 7 GB of memory usage.

Yesterday it reached 18 GB, so I deleted the pod; the rebuilt pod starts again from low memory usage.

Is this a known issue? What can I do to debug it better?

|

|

It can still happen with a webhook test if too many logs are being written. dimitri.bellini, is that the case?

|

|

OK guys, for us the problem is fixed... sorry.

In our test environment, based on Rancher with Kubernetes and Zabbix Server 6.0.17, it was fixed after upgrading to the latest chart (1.3.2) and updating the Zabbix Proxy (6.0.17) inside Kubernetes.

So everything was caused by an outdated proxy and chart.

Thanks so much

|

|

Just to be clear: the latest chart solved this problem:

Cannot execute script: Error: log exceeds the maximum size of 8388608 bytes.

at [anon] (zabbix.c:83) internal

at [anon] () native strict preventsyield

at [anon] (function:239) preventsyield

BUT, for me it has not solved the proxy's huge memory usage (8 GB of memory between 8:06 AM and 11:42 AM, and it keeps growing until the pod runs out of memory and is terminated).

|

Generated at Thu Feb 19 19:28:46 EET 2026 using Jira 10.3.13#10030013-sha1:56dd970ae30ebfeda3a697d25be1f6388b68a422.